TensorFlow.js allows web developers to easily build and run browser-based Artificial Intelligence apps using only JavaScript.

Are you a web developer interested in Artificial Intelligence (AI)? Want to easily build some sweet AI apps entirely in JavaScript that run anywhere, without the headache of tedious installs, hosting on cloud services, or working with Python? Then TensorFlow.js is for you!

This paradigm-shifting JavaScript library bridges the gap between frontend web developers and the formerly cumbersome process of training and taming AIs. Developers can now more easily leverage Artificial Intelligence to build uniquely responsive apps that react to user inputs such as voice or facial expressions in real time, or to create smarter apps that learn from user behaviour and adapt. AI can create novel, personalized experiences and automate tedious tasks. Examples include content recommendation, interaction through voice commands or gestures, using the phone camera to identify products or places, and learning to assist the user with daily tasks. As the ever-accelerating AI revolution works its way into smart frontend applications, TensorFlow.js might also be a handy skill to impress your boss or prospective employers with.

In Part I of this series, we briefly summarize the advantages of TensorFlow.js (TFJS) over traditional backend-focused, Python-based approaches to show you why it’s such a big deal.

Artificial Intelligence, Liberated

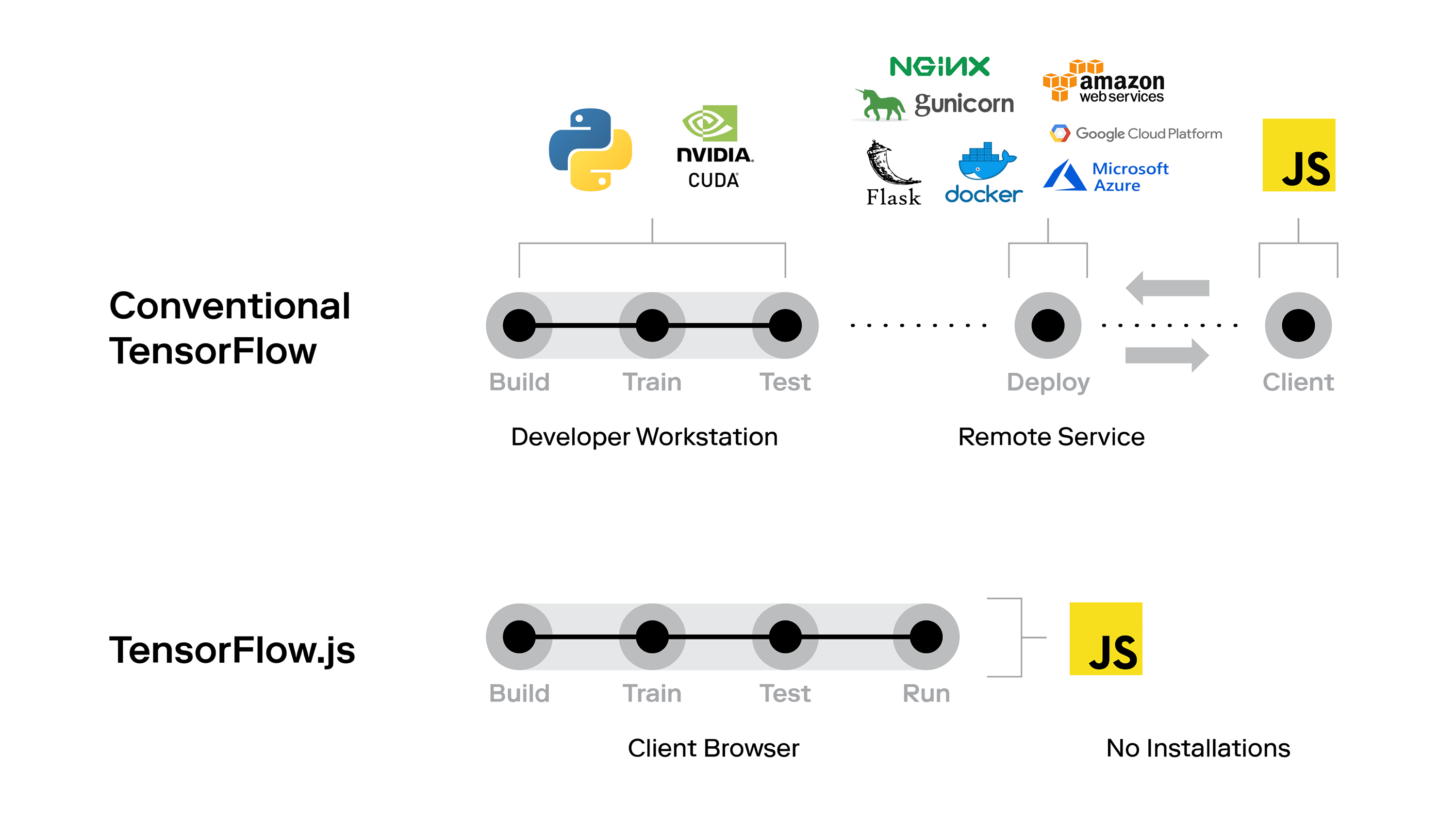

In the past, many of the best Machine Learning (ML) and Deep Learning (DL) frameworks required fluency in Python and its associated library ecosystem. Efficient training of ML models required special-purpose hardware and software, such as NVIDIA GPUs and CUDA. Even today, integrating ML into JavaScript applications often means deploying the ML part on remote cloud services, such as AWS SageMaker, and accessing it via API calls. This non-native, backend-focused approach has likely kept many web developers from taking advantage of the rich possibilities that AI offers to frontend development.

Not so with TensorFlow.js! With these obstacles gone, adopting AI solutions is now quick, easy, and fun. In Part II of this series, we’ll show you just how easy it is to create, train, and test your own ML models entirely in the browser using only JavaScript!

Besides allowing you to code in JavaScript, the real game-changer is that TensorFlow.js lets you do everything client-side, which comes with several advantages:

Apps are easy to share

Provide the user with a URL, and voilà, they are interacting with your ML model. It’s that simple! Models run directly in the browser without additional files or installations. You no longer need to connect your JavaScript to a Python service running in the cloud. And instead of fighting with virtual environments or package managers, you can include all dependencies as HTML script tags. This lets you collaborate efficiently, prototype rapidly, and deploy proofs of concept (PoCs) painlessly.

The client provides the compute power

Training and predictions are offloaded to the user’s hardware. This eliminates significant cost and effort for the developer. You don’t need to worry about keeping a potentially costly remote machine running, adjusting compute power based on changing usage, or service start-up times. Forget about load balancing, microservices, containerization or provisioning elastic cloud compute capabilities. By removing such backend infrastructure requirements, TensorFlow.js lets you focus on creating amazing user experiences! However, you must still make sure that the client’s hardware is powerful enough to provide a satisfying experience given the compute demands of your AI models.

Data never leaves the client’s device!

This is crucially important as users are increasingly concerned about protecting their sensitive information, especially in the wake of the Cambridge Analytica scandal and massive security breaches. With TensorFlow.js, users can take advantage of AI without sending their personal data over a network and sharing it with a third party. This makes it easier to build secure applications that satisfy data protection regulations, e.g. healthcare apps that tap into wearable medical sensors. It also lets you build AI browser extensions for enhanced or adaptive user experiences while keeping user behaviour inherently private. Visit this repository for an example of a Google Chrome extension that uses TensorFlow.js to provide in-browser image recognition.

(Top) The conventional ML architecture involves a diverse tech stack requiring coordination and communication between multiple services. (Bottom) TensorFlow.js has the potential to considerably simplify the architecture and tech stack. Everything is run within the client browser using JavaScript.

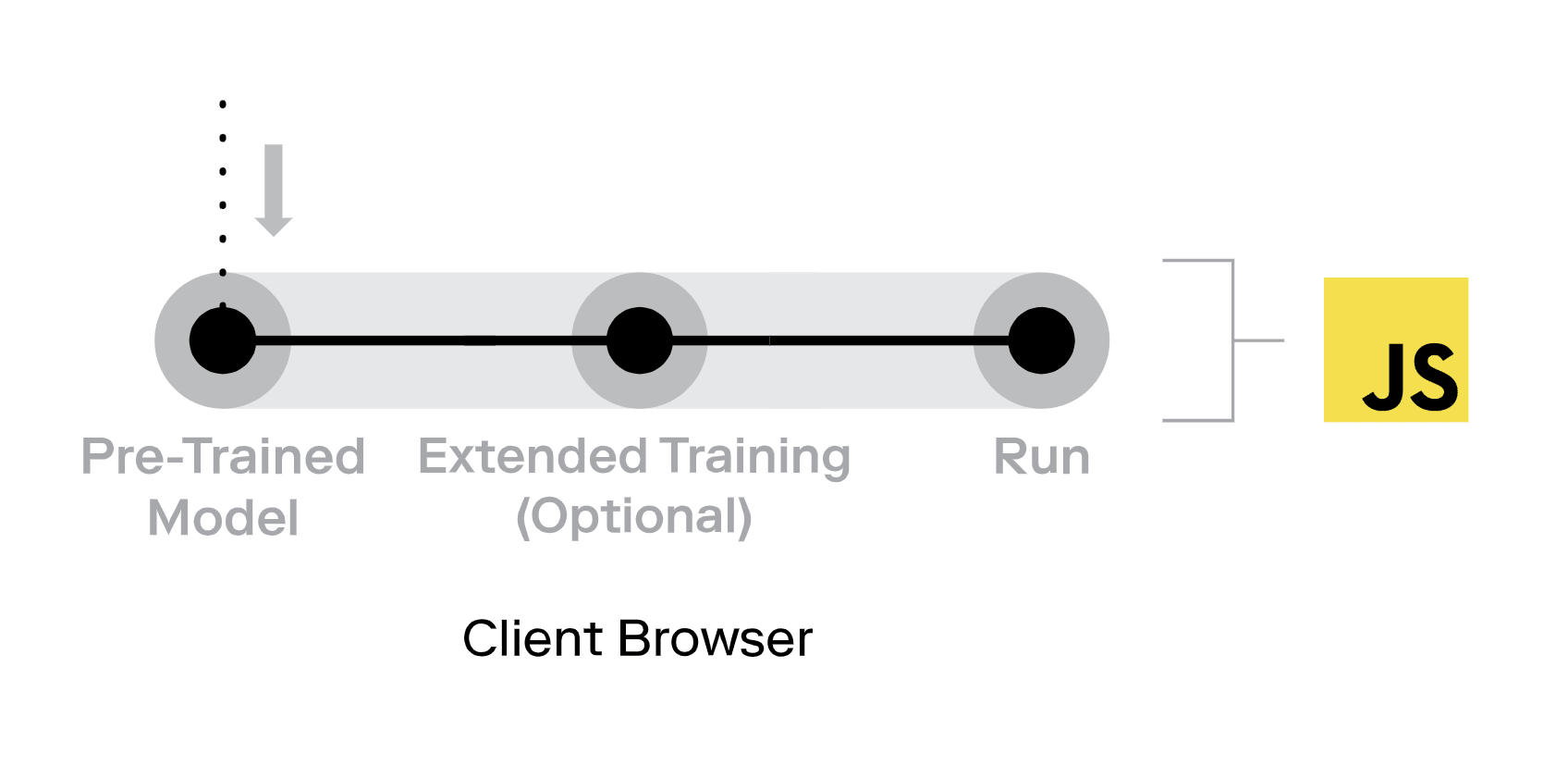

The TensorFlow.js architecture can be simplified further if a pre-trained model is available. In this case the client downloads the model and skips the training entirely. If further specialization of the model is necessary, considerable effort and training time can be saved by using extended training (transfer learning) on a pre-trained model rather than training from scratch.
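To make the idea of reusing a pre-trained model more concrete, here is a minimal sketch of one simple flavour of transfer learning: the pre-trained network is treated as a frozen feature extractor, and only a tiny classifier on top of its output is "trained" from the user’s own examples. It assumes the feature vectors have already been produced by some pre-trained model (that step is not shown), and it deliberately uses plain JavaScript rather than any TensorFlow.js API. The class name `NearestCentroidHead` is hypothetical; a real TFJS app could instead train a small dense layer on top of the features with TensorFlow.js itself.

```javascript
// Hypothetical classifier "head" for transfer learning with frozen features.
// Feature vectors are assumed to come from a pre-trained model's intermediate
// layer; this class never changes that model, it only learns class averages.
class NearestCentroidHead {
  constructor() {
    this.sums = new Map();   // label -> element-wise sum of feature vectors
    this.counts = new Map(); // label -> number of examples seen
  }

  // "Training" only updates per-class running sums; the base model stays frozen.
  addExample(features, label) {
    if (!this.sums.has(label)) {
      this.sums.set(label, new Array(features.length).fill(0));
      this.counts.set(label, 0);
    }
    const sum = this.sums.get(label);
    for (let i = 0; i < features.length; i++) sum[i] += features[i];
    this.counts.set(label, this.counts.get(label) + 1);
  }

  // Return the label whose mean feature vector is closest (squared Euclidean
  // distance), or null if no examples have been added yet.
  predict(features) {
    let bestLabel = null;
    let bestDist = Infinity;
    for (const [label, sum] of this.sums) {
      const n = this.counts.get(label);
      let dist = 0;
      for (let i = 0; i < features.length; i++) {
        const d = features[i] - sum[i] / n;
        dist += d * d;
      }
      if (dist < bestDist) {
        bestDist = dist;
        bestLabel = label;
      }
    }
    return bestLabel;
  }
}

// Usage with made-up 2-D feature vectors:
// const head = new NearestCentroidHead();
// head.addExample([0.9, 0.1], "thumbs-up");
// head.addExample([0.8, 0.2], "thumbs-up");
// head.addExample([0.1, 0.9], "thumbs-down");
// head.predict([0.7, 0.3]); // "thumbs-up"
```

Because only per-class averages are stored, adding an example is instant, which suits interactive "show the camera a few examples" apps. The tradeoff is that a nearest-centroid head can only separate classes that the frozen features already keep apart.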

Easier access to rich sensor data

Direct JavaScript integration makes it easy to connect your model to device inputs such as microphones or webcams. Since the same browser code runs on mobile devices, you can also make use of accelerometer, GPS, and gyroscope data. As of early 2019, training on mobile remains challenging due to hardware limitations. On-device training should become easier over the next few years as mobile processor manufacturers integrate AI-optimized compute capabilities into their product lines.
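Sensor streams arrive one reading at a time, while a model usually expects a fixed-size input. A common way to bridge the two is a sliding window. The sketch below, in plain JavaScript, buffers three-axis accelerometer readings and emits a flattened window every `hop` samples. The class name, the window and hop sizes, and the model call are hypothetical placeholders.

```javascript
// Collects [x, y, z] accelerometer samples into fixed-length windows that a
// model could consume as one input. windowSize and hop are illustrative choices.
class MotionWindow {
  constructor(windowSize, hop) {
    this.windowSize = windowSize; // samples per model input
    this.hop = hop;               // new samples between consecutive windows
    this.buffer = [];             // most recent samples, oldest first
    this.sinceLast = 0;           // samples pushed since the last emitted window
  }

  // Push one sample; returns a flat array of windowSize * 3 numbers when a
  // window is ready, otherwise null.
  push(x, y, z) {
    this.buffer.push([x, y, z]);
    if (this.buffer.length > this.windowSize) this.buffer.shift();
    this.sinceLast++;
    if (this.buffer.length === this.windowSize && this.sinceLast >= this.hop) {
      this.sinceLast = 0;
      return this.buffer.flat();
    }
    return null;
  }
}

// Browser usage (hypothetical sizes; some browsers ask the user for
// permission before delivering motion events):
// const motion = new MotionWindow(50, 25);
// window.addEventListener("devicemotion", (event) => {
//   const a = event.accelerationIncludingGravity;
//   if (!a) return;
//   const input = motion.push(a.x ?? 0, a.y ?? 0, a.z ?? 0);
//   if (input) { /* pass `input` to your model here */ }
// });
```

Overlapping windows (a hop smaller than the window size) let the app react more often without shortening the amount of motion history each prediction sees.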

Highly interactive and adaptive experiences

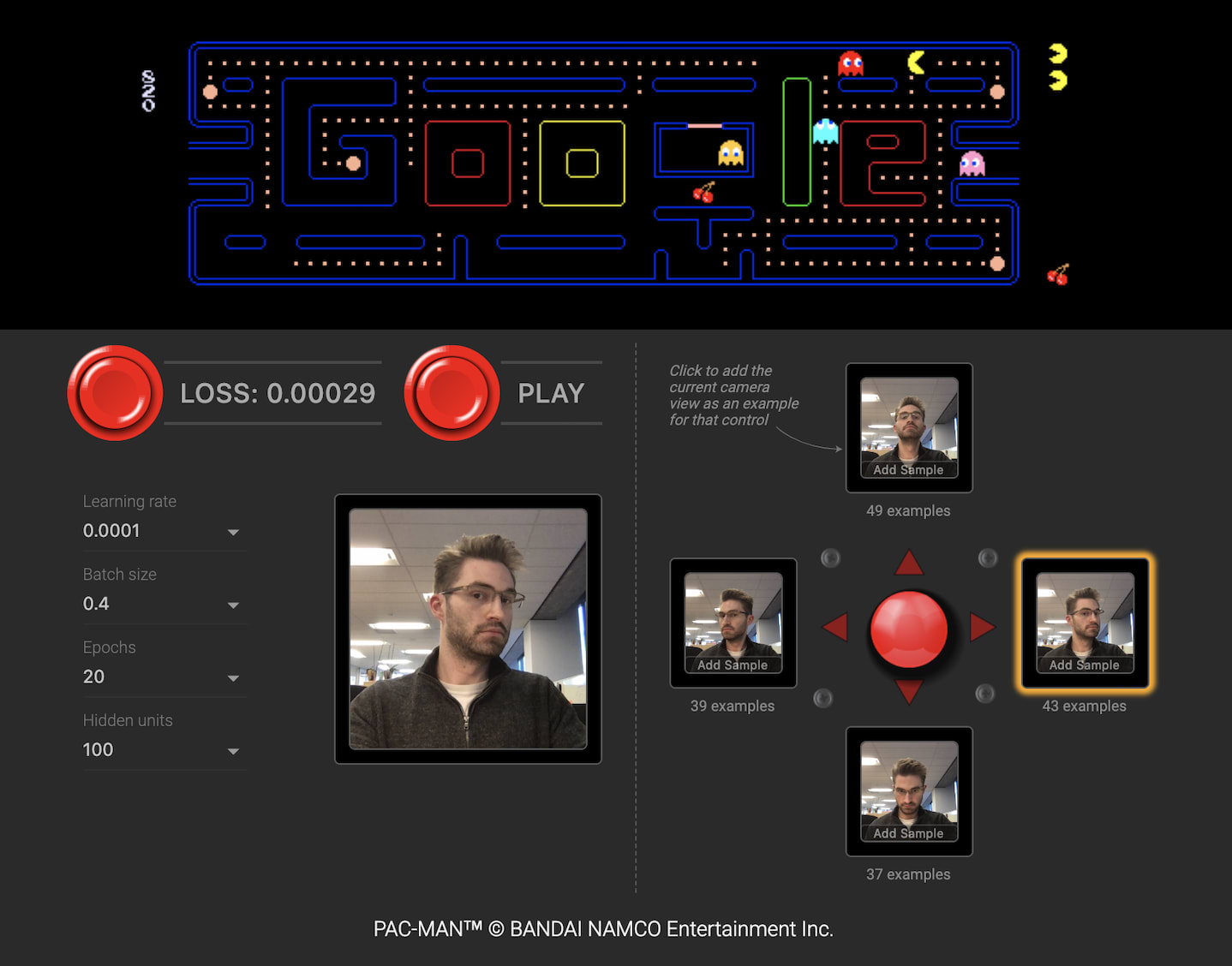

Real-time inference on the client side lets you make apps that respond immediately to user inputs such as webcam gestures. For example, Google released a webcam game that allows the user to play Pacman by moving their head (try it here). The model is trained to associate the user’s head movements with specific keyboard controls. Apps can also detect and react to human emotions, providing new opportunities to surprise and delight. Pre-trained models can be loaded and then fine-tuned within the browser using transfer learning to tailor them to specific users.

An image from Google’s WebCam Pacman game, where your head becomes the controller!

A demo of the pre-trained PoseNet model in action, which performs real-time estimation of key body point positions.
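The core idea behind the Pacman demo, turning a classifier’s output into game controls, can be sketched in a few lines. This minimal example assumes a hypothetical four-class model whose output probabilities arrive as a plain array in a fixed label order. It picks the most likely class and ignores frames where the model is not confident enough, which helps avoid jittery controls. The label order, the `CONTROLS` mapping, and the 0.6 threshold are assumptions, not details of Google’s implementation.

```javascript
// Hypothetical label order for a 4-class head-movement model.
const CONTROLS = ["ArrowUp", "ArrowDown", "ArrowLeft", "ArrowRight"];

// Map one frame's class probabilities to a key name, or null if the model is
// unsure. Ties go to the earlier label.
function probabilitiesToControl(probs, minConfidence = 0.6) {
  if (probs.length !== CONTROLS.length) {
    throw new Error(`expected ${CONTROLS.length} probabilities, got ${probs.length}`);
  }
  let best = 0;
  for (let i = 1; i < probs.length; i++) {
    if (probs[i] > probs[best]) best = i;
  }
  return probs[best] >= minConfidence ? CONTROLS[best] : null;
}

// Hypothetical browser usage: forward the chosen key to a game that listens
// for keydown events.
// const key = probabilitiesToControl(probs);
// if (key) document.dispatchEvent(new KeyboardEvent("keydown", { key }));
```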

TensorFlow.js also has the following helpful perks:

Use of pre-trained models

Tackle sophisticated tasks quickly by loading powerful pre-built models into the browser. No need to reinvent the wheel or start from scratch. Select from a growing number of available models (at this link), or even create JavaScript ports of TensorFlow Python models using this handy TensorFlow converter tool. TensorFlow.js also supports transfer learning, letting you retrain these models on sensor data and tailor them to your specific application. There is huge value in building on top of pre-trained models, which have often been trained on enormous datasets using compute resources typically beyond the reach of most individuals, let alone the browser. Let others do the heavy lifting while you focus on the lighter task of fine-tuning these models to your users’ needs.

Hardware acceleration on any WebGL-capable GPU

TensorFlow.js uses WebGL to run model training and inference on the device’s GPU. You don’t need an NVIDIA GPU with CUDA installed to train a model. That’s right: you can finally train deep learning models on AMD GPUs too!

Unified backend and frontend codebase

TensorFlow.js also runs server-side on Node.js, where the @tensorflow/tfjs-node-gpu package adds CUDA support for NVIDIA GPUs. In this sense, it extends JavaScript’s traditional benefit of “writing once, running on both client and server” to the realm of artificial intelligence.

Requirements

A couple of notes on what to consider before going full TFJS. TFJS can use significantly more resources than a typical JS application, especially when image or video data are involved. For example, the Webcam Pacman app uses approximately 100 MB of in-browser resources and 200 MB of GPU RAM when running in Chrome v71 on a MacBook Pro with macOS Mojave 10.14.2 (2.2 GHz Intel Core i7, 16 GB 2400 MHz DDR4, Radeon Pro 555X 4096 MB, Intel UHD Graphics 630 1536 MB).
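To see why image and video inputs are expensive, it helps to do the arithmetic. TensorFlow.js tensors default to the float32 data type, so each element takes 4 bytes. The helper below (a hypothetical name) computes the raw size of a dense float32 tensor. Treat it as a lower bound for the input alone: model weights, intermediate activations, and backend overhead such as WebGL textures come on top.

```javascript
// Bytes needed to store a dense float32 tensor of the given shape
// (4 bytes per element). A scalar has shape [] and takes 4 bytes.
function float32TensorBytes(shape) {
  return shape.reduce((n, dim) => n * dim, 1) * 4;
}

// Convert bytes to mebibytes for readability.
const toMiB = (bytes) => bytes / (1024 * 1024);

// One 224x224 RGB frame: 224 * 224 * 3 * 4 = 602,112 bytes (about 0.57 MiB).
// A batch of 32 such frames: 19,267,584 bytes (about 18.4 MiB).
// One full-resolution 640x480 RGB frame: 3,686,400 bytes (about 3.5 MiB).
```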

Best performance requires a browser with WebGL support plus access to the GPU. You can check whether your browser supports WebGL by visiting this link. Even with WebGL, GPU acceleration may not be available on older devices, since it also requires users to have up-to-date video drivers.
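Besides pointing users to a test page, an app can check for WebGL itself at startup. The sketch below tries to create a WebGL 2 context, then a WebGL 1 context, using the standard canvas `getContext` API. The canvas factory is passed in so the function can be exercised outside a browser; in a page you would pass `() => document.createElement("canvas")`. A successful context does not guarantee hardware acceleration (a browser may fall back to a software renderer), so once TensorFlow.js has initialized (`await tf.ready()`), `tf.getBackend()` is a more direct way to see which backend it actually chose.

```javascript
// Returns "webgl2", "webgl", or null depending on which context the browser
// can create. A fresh canvas is used for each attempt.
function detectWebGL(createCanvas) {
  for (const type of ["webgl2", "webgl", "experimental-webgl"]) {
    let ctx = null;
    try {
      ctx = createCanvas().getContext(type);
    } catch (e) {
      ctx = null; // treat a thrown error the same as an unsupported context
    }
    if (ctx) return type === "experimental-webgl" ? "webgl" : type;
  }
  return null;
}

// Browser usage:
// const webgl = detectWebGL(() => document.createElement("canvas"));
// if (!webgl) { /* warn the user or load a smaller model */ }
```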

The need for modern hardware and updated software to achieve optimal performance can cause the user experience to vary substantially across devices. Therefore, test performance extensively on a wide variety of devices, especially lower-end ones, before distributing a TFJS app.

There are a couple of strategies to deal with potential performance limitations. Apps can check the available compute capabilities and choose a model size accordingly, with a corresponding tradeoff in accuracy. A 5-10x reduction in memory requirements can often be achieved by reducing the number of model parameters without sacrificing more than 20% in accuracy. Reducing the model size also saves bandwidth when a pre-trained model is transferred to the client’s device. Good memory management keeps the app’s footprint low by avoiding memory leaks, e.g. by wrapping tensor operations in TFJS’s tf.tidy(), which disposes of the intermediate tensors they create.
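The "choose a model size based on available resources" strategy can be sketched as a small selection function. This minimal example assumes three hypothetical variants of the same model with made-up parameter counts. It stores weights as float32 (4 bytes per parameter) and picks the most accurate variant whose weights fit a memory budget, falling back to the smallest one. How you derive the budget is up to you. One rough signal is `navigator.deviceMemory`, which reports approximate device RAM in gigabytes but is not supported in every browser.

```javascript
// Hypothetical model variants, ordered from most to least accurate.
// Parameter counts are illustrative placeholders, not real models.
const MODEL_VARIANTS = [
  { name: "large", params: 25000000 },
  { name: "medium", params: 5000000 },
  { name: "small", params: 1000000 },
];

// Memory for the weights alone; float32 uses 4 bytes per parameter.
function weightBytes(params, bytesPerParam = 4) {
  return params * bytesPerParam;
}

// Pick the first (most accurate) variant whose weights fit in budgetBytes.
// Falls back to the smallest variant so the app still runs on weak devices.
function chooseVariant(budgetBytes, variants = MODEL_VARIANTS) {
  for (const v of variants) {
    if (weightBytes(v.params) <= budgetBytes) return v;
  }
  return variants[variants.length - 1];
}
```

With these placeholder numbers, each step down cuts weight memory by 5x, in line with the 5-10x range mentioned above. The accuracy cost of each step would have to be measured for your own model.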

Finally, nothing can beat a good understanding of the data and problem domain. Many problems in image recognition, for example, can be solved by using images of surprisingly poor resolution. Some problems can also be simplified by using conventional algorithms with lower resource requirements or by guiding the user to do part of the work, e.g. by asking them to center the object to be detected.
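As a rough illustration of how much lower resolution saves, the sketch below downsamples a grayscale image by averaging non-overlapping `factor` x `factor` blocks (average pooling). Reducing each dimension by 2 shrinks the input 4x, and by 4 shrinks it 16x. It assumes a row-major array of pixel intensities whose width and height divide evenly by the factor; the function name is hypothetical. In a TensorFlow.js app, `tf.image.resizeBilinear` is a built-in alternative that works directly on image tensors.

```javascript
// Average-pool a row-major grayscale image by an integer factor.
// Returns the smaller image together with its new dimensions.
function downsampleGray(pixels, width, height, factor) {
  if (width % factor !== 0 || height % factor !== 0) {
    throw new Error("width and height must be divisible by factor");
  }
  const outW = width / factor;
  const outH = height / factor;
  const out = new Float32Array(outW * outH);
  for (let oy = 0; oy < outH; oy++) {
    for (let ox = 0; ox < outW; ox++) {
      // Sum the factor x factor block that maps to output pixel (ox, oy).
      let sum = 0;
      for (let dy = 0; dy < factor; dy++) {
        for (let dx = 0; dx < factor; dx++) {
          sum += pixels[(oy * factor + dy) * width + (ox * factor + dx)];
        }
      }
      out[oy * outW + ox] = sum / (factor * factor);
    }
  }
  return { pixels: out, width: outW, height: outH };
}
```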

Examples

Sounds great! So how do we get started? Visit Part II of this series to learn how to build your very own TFJS applications!

Here are some more fun examples to get your creative juices flowing!

- Let’s Dance! A holiday-themed interactive animation created by the Rangle.io team for December 2018.

- Emoji Scavenger Hunt by Google. A fun game where you are challenged to seek out and photograph objects that look like emojis, under a time limit!

- Teachable Machine by Google, where you can train a neural network to recognize specific gestures and use these to control an output image or sound.

- Rock Paper Scissors by Reiichiro Nakano, a fun twist on the classic schoolyard game.

- Emotion Extractor by Brendan Sudol, which recognizes emotion from facial expressions in uploaded images, and tags them with the appropriate emoji.

Brendan Sudol’s Emotion Extractor. Source: https://brendansudol.com/faces/.

In addition to games and apps, TensorFlow.js can be used to build interactive teaching tools for conveying key concepts or novel methods in machine learning and deep learning:

- GAN Lab: an interactive playground for Generative Adversarial Networks (GANs) in the browser.

- GAN Showcase: a neat demo by Yingtao Tian of a Generative Adversarial Network that ‘dreams’ faces and morphs between them.

- tSNE for the Web: an in-browser demo of the t-SNE algorithm for visualizing high-dimensional data.

- Neural Network Playground: while not technically TFJS, it was built from code that eventually became TFJS, and can be considered the browser app that started it all!

To see even more amazing projects built in TensorFlow.js, check out this showcase!

Click here to learn more about Artificial Intelligence at Rangle.