Confidently scale LLMs in production

For businesses integrating generative AI, evaluating model performance is essential. Regularly assessing how well these models meet user needs and business objectives ensures that the AI keeps adding value and driving innovation without introducing regressions.

How we can help

The lack of evaluations has been a key obstacle to deploying AI models in production. By treating model evaluations as unit tests for LLMs and pairing prompt development with evaluations, our experts turn ambiguous dialogues into quantifiable experiments and make AI a more manageable software product.
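
To make the "evaluations as unit tests" idea concrete, here is a minimal Python sketch. The model call is a hypothetical stub (`fake_model`), and the `EvalCase` structure, case names, and checks are illustrative assumptions rather than any specific framework's API. Each case pairs a prompt with a pass/fail check, so a prompt change can be rerun against the whole suite like a test run.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """One 'unit test' for the model: a prompt plus a pass/fail check on the output."""
    name: str
    prompt: str
    check: Callable[[str], bool]


def is_valid_json(output: str) -> bool:
    # Catches the "bad output formatting" failure mode for JSON responses.
    try:
        json.loads(output)
        return True
    except json.JSONDecodeError:
        return False


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call; replace with your provider's client.
    if "JSON" in prompt:
        return '{"status": "ok"}'
    return "Sure! Here is your answer."


def run_suite(model: Callable[[str], str], cases: list[EvalCase]) -> dict[str, bool]:
    # Run every case against the model and record whether its check passed.
    return {case.name: case.check(model(case.prompt)) for case in cases}


cases = [
    EvalCase("returns_json", "Reply in JSON with a status field.", is_valid_json),
    EvalCase("no_apology", "Summarize the refund policy.", lambda out: "sorry" not in out.lower()),
]

results = run_suite(fake_model, cases)
print(results)  # {'returns_json': True, 'no_apology': True}
```

Because each case returns a boolean, the suite's output can be tracked over time exactly like a test report.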

Create human-graded evaluation suites

Our team develops comprehensive human-graded evaluation suites that test your LLM against the failure modes that matter in production: badly formatted output, inaccurate responses or actions, going off the rails, inappropriate tone, and hallucinations. This helps ensure the model meets user needs and business objectives.
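
As an illustration of how human grades can be turned into numbers you can track, the sketch below aggregates reviewer flags into a failure rate per category. The category labels mirror the failure modes listed above, but the `HumanGrade` record and the exact label set are assumptions made for this example.

```python
from collections import Counter
from dataclasses import dataclass, field

# Failure modes named in the text; the exact label set is an assumption.
CATEGORIES = ("formatting", "accuracy", "off_the_rails", "tone", "hallucination")


@dataclass
class HumanGrade:
    """One reviewer's verdict on one model transcript (hypothetical record shape)."""
    transcript_id: str
    reviewer: str
    failures: set[str] = field(default_factory=set)  # categories the reviewer flagged


def failure_rates(grades: list[HumanGrade]) -> dict[str, float]:
    """Fraction of human grades that flagged each failure category."""
    if not grades:
        return {c: 0.0 for c in CATEGORIES}
    # Count flags per category, ignoring labels outside the agreed set.
    counts = Counter(f for g in grades for f in g.failures if f in CATEGORIES)
    return {c: counts[c] / len(grades) for c in CATEGORIES}


grades = [
    HumanGrade("t1", "alice", {"tone"}),
    HumanGrade("t2", "bob"),
    HumanGrade("t3", "alice", {"hallucination", "accuracy"}),
    HumanGrade("t4", "bob", {"hallucination"}),
]
rates = failure_rates(grades)
print(rates["hallucination"])  # 0.5
```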

Create model-graded evaluation suites

We also design model-graded evaluation suites that let you evaluate and monitor your LLM's performance automatically and at scale. Delegating the parts of grading that an LLM can handle reduces human workload, so human testers can concentrate on the complex edge cases that inform refinements to the evaluation methods.
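
A common way to build a model-graded evaluation is to have a second "judge" model return a constrained verdict. The minimal sketch below assumes a hypothetical `stub_judge` in place of a real grader LLM and an illustrative rubric prompt; any reply that is not a clean PASS or FAIL is routed to a human queue, which is one way to keep people focused on the ambiguous edge cases.

```python
from typing import Callable

# Illustrative rubric prompt; the wording is an assumption, not a standard template.
JUDGE_TEMPLATE = (
    "You are grading a customer-support answer.\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    "Reply with exactly PASS if the answer is accurate and polite, otherwise FAIL."
)


def grade_with_model(judge: Callable[[str], str], question: str, answer: str) -> str:
    """Ask a grader model for a verdict; anything unparseable is escalated to a human."""
    reply = judge(JUDGE_TEMPLATE.format(question=question, answer=answer)).strip().upper()
    if reply in ("PASS", "FAIL"):
        return reply
    return "NEEDS_HUMAN"


def stub_judge(prompt: str) -> str:
    # Hypothetical stand-in for a call to a grader LLM.
    if "I don't know" in prompt:
        return "not sure, the answer is ambiguous"
    return "FAIL" if "rude" in prompt.lower() else "PASS"


samples = [
    ("Where is my order?", "It shipped yesterday and arrives Friday."),
    ("Where is my order?", "That's a rude question."),
    ("Can I pay by invoice?", "I don't know."),
]
verdicts = [grade_with_model(stub_judge, q, a) for q, a in samples]
# Samples the judge could not grade cleanly go to human testers.
human_queue = [s for s, v in zip(samples, verdicts) if v == "NEEDS_HUMAN"]
print(verdicts)  # ['PASS', 'FAIL', 'NEEDS_HUMAN']
```

Constraining the judge to a fixed vocabulary keeps parsing trivial and makes disagreements easy to spot.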

Govern and upgrade models

To maintain high-quality performance and prevent regressions, we provide ongoing model governance and upgrades. Our team continuously refines evaluation suites, updates models with new data, and implements improvements based on evaluation results, ensuring your LLM remains a valuable asset that drives innovation.
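
One way to make "prevent regressions" enforceable is an upgrade gate that compares a candidate model's evaluation results against the current baseline. This is a minimal sketch under assumed conventions: results are per-case booleans (as a suite like the ones above might produce), and the approval policy and its 0.9 threshold are hypothetical choices, not a prescribed standard.

```python
def pass_rate(results: dict[str, bool]) -> float:
    # Share of evaluation cases that passed (True counts as 1).
    return sum(results.values()) / len(results) if results else 0.0


def regressions(baseline: dict[str, bool], candidate: dict[str, bool]) -> list[str]:
    """Cases that passed on the baseline model but fail (or are missing) on the candidate."""
    return sorted(name for name, ok in baseline.items() if ok and not candidate.get(name, False))


def approve_upgrade(baseline: dict[str, bool], candidate: dict[str, bool],
                    min_rate: float = 0.9) -> bool:
    # Hypothetical policy: no per-case regressions and an overall pass rate >= min_rate.
    return not regressions(baseline, candidate) and pass_rate(candidate) >= min_rate


baseline = {"refund_policy": True, "json_format": True, "tone": True, "edge_case_17": False}
candidate = {"refund_policy": True, "json_format": False, "tone": True, "edge_case_17": True}

print(regressions(baseline, candidate))      # ['json_format']
print(approve_upgrade(baseline, candidate))  # False
```

Checking individual regressions, not just the aggregate score, stops an upgrade that fixes one case from silently breaking another.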

Companies we've helped

AI-powered Human-in-the-loop Solution

buildco

Three weeks to launch a scalable AI-powered marketplace solution with secure governance

Using generative AI and an open-source AI governance platform, we delivered a human-in-the-loop matchmaking system for a service marketplace platform. Built with oversight, traceability, and control at its core, the system minimized operational costs, accelerated lead response times, and scaled without increasing headcount.

New Product Development & Artificial Intelligence

Babbly

An AI-enabled demo from concept to functional model in just 2 weeks

The Babbly team closed a successful pre-seed investment round with a machine learning proof of concept (PoC).

New Product Development & Artificial Intelligence

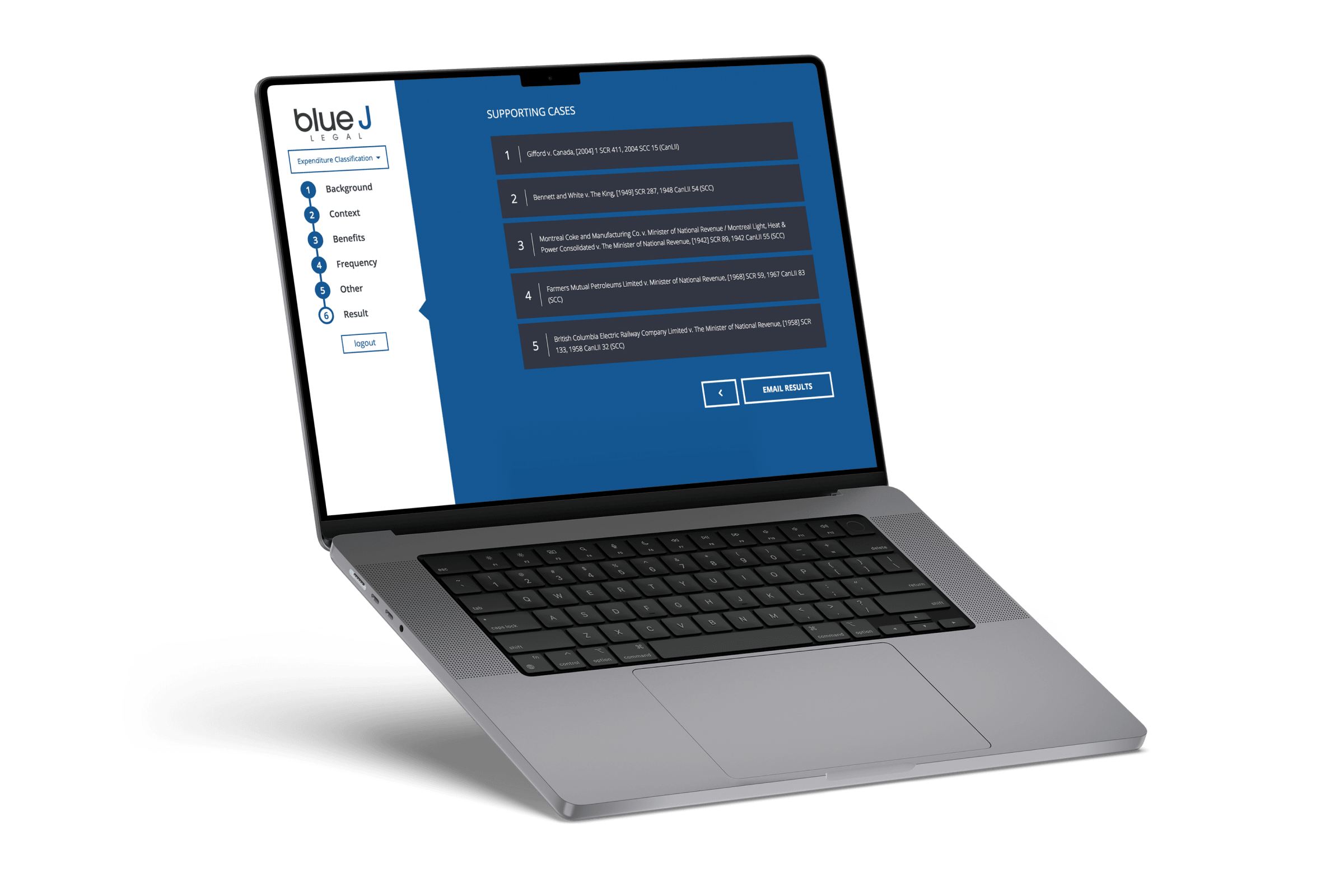

Blue J Legal

Simplifying the work of tax professionals with a mobile app

Blue J Legal successfully launched its AI-powered research tool and acquired its first paying customers during its partnership with Rangle.

Featured Posts

In this webinar, we discuss practical frameworks for companies considering how to deploy AI in their own context and build products with it.

A practical guide to using AI to curate product collections at scale, make product recommendation and search algorithms smarter with semantic awareness, and empower teams to deliver seamless customer experiences.

Generate nuanced translations for your e-commerce site using AI. Learn how to set up, fine-tune, and integrate AI models seamlessly with your headless CMS for automatic content translation. Uncover practical steps for implementation, from choosing the right language model to architecting efficient translation workflows that align with your budget and goals.