Key Takeaways

- Strategic AI Vision: Understand the strategic importance of AI and its transformative impact on software development and user experience.

- AI in the Wild: See how companies like Intuit and Lonely Planet are building industry-leading, AI-powered customer experiences.

- AI Deployment: Learn strategies for rapid and efficient AI integration and deployment, aligning initiatives with your business objectives.

Revolutionizing AI: Exploring Foundation Models, LLMs, and Generative AI

First, a brief overview of some terms:

- Foundation models are AI systems with broad capabilities that can be adapted to many tasks. Unlike single-task AI, they are trained on extensive datasets covering diverse information. This gives them a versatile base for performing multiple tasks, and some see them as a step toward artificial general intelligence.

- Large Language Models (LLMs) are a type of foundation model designed to process and generate human language. They have billions, sometimes trillions, of parameters and are trained on extensive text data, which enables nuanced and complex language comprehension and generation. Examples include OpenAI's GPT series and Google's PaLM, which are adept at tasks like translation and content creation.

- Generative AI refers to any AI system designed to create content such as images, text, or music, based on patterns learned from diverse training datasets. Its ability to generate creative and relevant content has applications in both the arts and practical domains, including automated business content generation and personalized media creation.

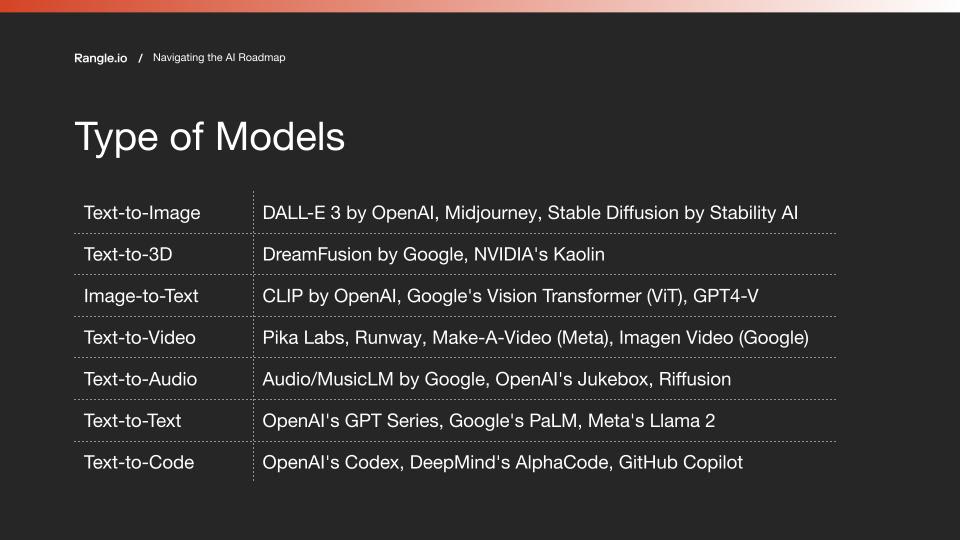

Exploring Different Types of AI Models: How to Choose?

There is a wide variety of AI models, each tailored to specific modalities and functions. Each model outlined in the following table transforms input from one format to another (for example, text to image or speech to text), illustrating the range of capabilities of modern AI systems:

When considering which model to use, start with the specific task you need to accomplish. Implementing AI in an app typically means using pre-built models rather than training your own foundation model, which can be prohibitively expensive. Building a foundation model is a research-intensive effort usually reserved for large companies, as it can cost hundreds of millions to billions of dollars.

However, the open-source community offers an alternative. For example, Meta’s Llama 2 is comparable to GPT-3.5 on some benchmarks while being smaller, and the community has adapted it into various specialized models. These open-source models, while generally less powerful than the largest proprietary models, can be better suited to specific tasks and offer greater control and customization.

When deciding between proprietary models and open-source options, weigh not only effort and resources but also your strategy and operating constraints. In regulated industries like healthcare, a model like Llama 2 that you host yourself can reassure regulators, because you control who can access the data and how it is used.

AI in the Wild

One of the key challenges for companies starting to implement AI is deciding what kind of value to provide users. Below are examples of what some companies are doing, how they’re thinking about it, how you might adapt it, and the UX/UI patterns involved.

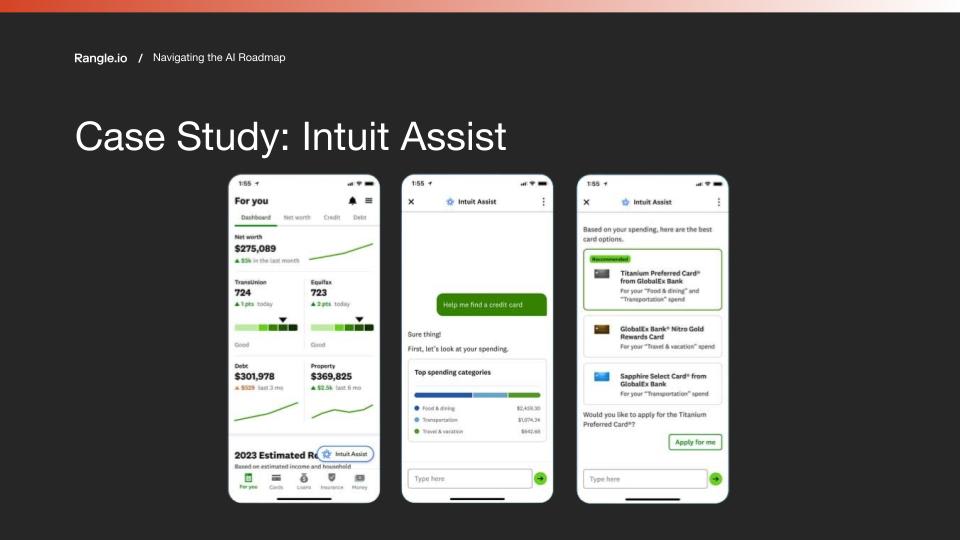

Intuit Assist

Pattern: We have access to your data; why not talk to it?

Intuit Assist is an AI-driven chat interface that lets users explore their financial data through conversation. Instead of relying on traditional chart-based data analysis, users can simply ask questions, which makes engagement more natural and intuitive. It shows how AI can transform the user experience in the financial sector by making interactions more engaging and valuable.

Trill by Lonely Planet

Pattern: Creating actionable experiences by extrapolating and generalizing from minimal input, whether text, an image, or an idea

Lonely Planet's Trill feature converts social media images, such as influencers' Instagram posts, into "bookable content," allowing users to easily book similar travel experiences. Trill identifies specific details like neighborhoods and suggests activities, enhancing user experience through seamless booking integration.

Canva

Pattern: Simplifying complex tasks by describing what you want in a conversational manner

Canva has integrated AI to make design more accessible and intuitive, especially for users with limited design skills. Users describe what they need conversationally, much as they would brief a graphics department, in line with Canva's goal of making design accessible and user-friendly.

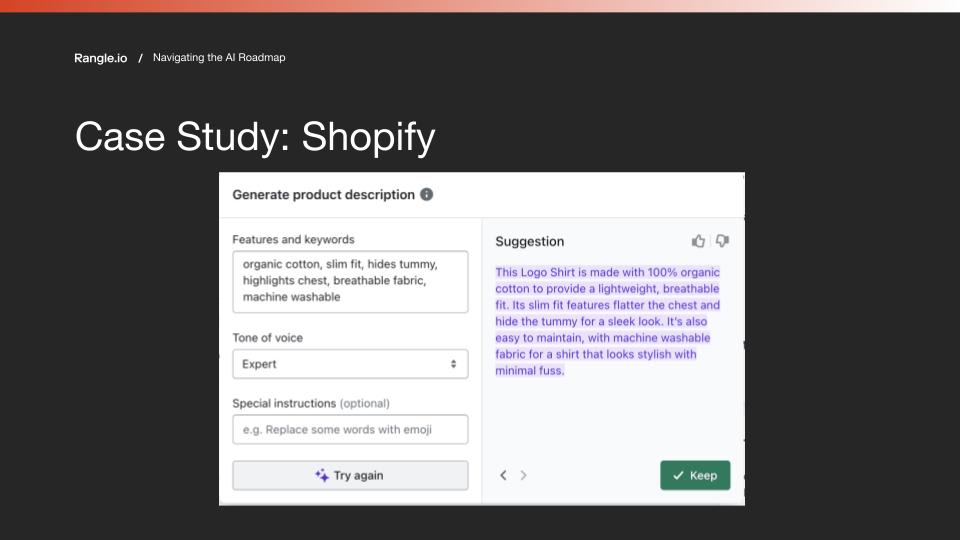

Shopify

Pattern: Anticipating what you want, offering intuitive suggestions, and providing multiple permutations

Shopify, an e-commerce platform, uses AI to help merchants write product descriptions and tags. The AI offers intuitive suggestions and multiple variations to choose from, streamlining a tedious task and showing how AI can simplify online business operations and improve digital interactions.

Tavily

Pattern: AI agents offering proactive assistance

Tavily is an AI-driven agent that autonomously performs tasks and offers proactive assistance. It can research and summarize answers to complex queries, such as whether to invest in certain stocks, working asynchronously and notifying users once it has found an answer. Tavily also monitors developments relevant to users' professions and interests and alerts them proactively, a significant shift toward personalized, proactive human-AI interaction.

Deploying AI in Your Company

There are three things to consider when deploying AI in your company:

- User experience: How to ensure effective and satisfying AI interactions?

- Model consistency: What do you have to do to launch your model properly at scale?

- Evaluating performance: Is your model achieving its outcome, and providing good and safe answers?

User Experience: Control for Uncertainty

Keep a human in the loop (for the first version)

During the early stages of AI deployment, especially in user experience design, it's important to keep a human in the loop. At Rangle, this approach is key to helping our clients ship in weeks, not months or years.

This means involving human oversight and decision-making in AI applications to ensure they are reliable, safe, and appropriate for the context. This practice not only improves AI performance but also helps identify and fix any unexpected problems, biases, or errors.
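To make the human-in-the-loop idea concrete, here is a minimal Python sketch, not a production design: AI-generated drafts go into a review queue and reach users only after a reviewer approves them. The `ReviewQueue` and `Draft` classes, the `fake_model` stub, and the rejection rule are all hypothetical placeholders; in a real system `generate` would call your model and `decide` would be a person working through a review UI.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Draft:
    """An AI-generated response waiting for a human decision."""
    prompt: str
    text: str
    approved: bool = False


@dataclass
class ReviewQueue:
    """Hypothetical queue that holds AI drafts until a human approves or rejects them."""
    pending: List[Draft] = field(default_factory=list)
    published: List[Draft] = field(default_factory=list)
    rejected: List[Draft] = field(default_factory=list)

    def submit(self, prompt: str, generate: Callable[[str], str]) -> Draft:
        # Generate a draft, but keep it away from the end user for now.
        draft = Draft(prompt=prompt, text=generate(prompt))
        self.pending.append(draft)
        return draft

    def review(self, decide: Callable[[Draft], bool]) -> None:
        # A human reviewer decides on each pending draft; only approved drafts are published.
        for draft in self.pending:
            if decide(draft):
                draft.approved = True
                self.published.append(draft)
            else:
                self.rejected.append(draft)  # kept so failure patterns can be analyzed
        self.pending.clear()


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call.
    return f"Draft answer to: {prompt}"


queue = ReviewQueue()
queue.submit("What is my refund status?", fake_model)
queue.submit("Should I buy this stock?", fake_model)
# Stand-in for a human reviewer: reject anything that looks like investment advice.
queue.review(lambda draft: "stock" not in draft.prompt)
print([d.prompt for d in queue.published])  # ['What is my refund status?']
```

Keeping rejected drafts, rather than discarding them, gives you a record of the unexpected problems, biases, or errors that reviewers caught, which is useful input when you later relax the human review step.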

Manage expectations (AI notice)

Clearly communicating AI's capabilities and limitations helps align user experience with actual performance, reducing misunderstandings and frustrations. Transparent communication about AI's nature and its limits builds trust and improves user satisfaction.

Guide the user (provide suggested prompts)

Providing suggested prompts helps users express their needs clearly, streamlining interactions and reducing ambiguity, especially in complex or specialized domains. This approach is particularly useful in B2B and customer service settings, where it leads to more precise AI responses and higher user satisfaction.

Model Consistency: Ensuring AI Model Reliability

JSON Mode

JSON mode refers to a structured approach to AI response generation: it directs the model to output data in a predefined format such as JSON, containing specific fields relevant to user needs. This keeps responses concise and relevant, avoids extraneous text, and makes output consistent enough for your application to parse reliably.
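Some providers expose this directly as an API option (for example, OpenAI's Chat Completions API accepts `response_format={"type": "json_object"}`), but it is still prudent to validate the output in your own code. The Python sketch below shows one way to do that under stated assumptions: `REQUIRED_FIELDS` is a hypothetical schema, and `call_model` is a hypothetical callable standing in for whatever model client you use. Replies that are not valid JSON, or that lack a required field, trigger a retry.

```python
import json
from typing import Callable

# Hypothetical schema: the fields this app expects in every reply.
REQUIRED_FIELDS = {"answer": str, "confidence": (int, float), "sources": list}


def parse_structured(raw: str) -> dict:
    """Parse model output as JSON and check that each required field has the expected type."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on non-JSON text
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(name), expected_type):
            raise ValueError(f"field {name!r} is missing or has the wrong type")
    return data


def ask_structured(prompt: str, call_model: Callable[[str], str], retries: int = 2) -> dict:
    """Ask for JSON output and retry when the reply fails validation."""
    instruction = (
        f"{prompt}\nRespond only with a JSON object with the keys: "
        + ", ".join(REQUIRED_FIELDS)
    )
    last_error = None
    for _ in range(retries + 1):
        try:
            return parse_structured(call_model(instruction))
        except ValueError as err:
            last_error = err
    raise ValueError(f"no valid JSON after {retries + 1} attempts: {last_error}")


# Usage with a stubbed model: the first reply is chatty prose, the second is valid JSON.
replies = iter([
    "Sure! The capital of France is Paris.",
    '{"answer": "Paris", "confidence": 0.9, "sources": []}',
])
result = ask_structured("What is the capital of France?", lambda _: next(replies))
print(result["answer"])  # Paris
```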

Ground the model

Grounding AI models involves connecting them to specific, reliable data sources, ensuring responses are relevant and accurate for a particular enterprise. This process, essential for foundation models like LLMs, uses resources like internal databases, CMSes, or APIs, making AI responses not just generally knowledgeable but also tailored to specific business needs.

Retrieval-Augmented Generation (RAG) is an architectural pattern we’re seeing more and more for grounding models in your data: at request time, the system retrieves relevant data and adds it to the prompt to augment text generation, rather than relying only on what the model learned during training.
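Here is a deliberately simplified Python sketch of that flow: retrieve the most relevant snippets when a request arrives, then place them in the prompt as context. To stay self-contained, it swaps in simple word-overlap scoring where production RAG systems typically use embedding-based vector search. The `DOCUMENTS` list is a hypothetical stand-in for your internal database, CMS, or API, and the prompt wording is illustrative only.

```python
import re
from typing import List, Set

# Hypothetical knowledge base; in practice this would come from a CMS, database, or API.
DOCUMENTS = [
    "Refunds are issued within 14 days of receiving the returned item.",
    "Premium members get free shipping on all orders.",
    "Our support line is open Monday to Friday, 9am to 5pm.",
]


def tokenize(text: str) -> Set[str]:
    # Lowercase word set; a crude substitute for embeddings.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return up to k documents that share the most words with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d)]  # drop zero-overlap documents


def build_grounded_prompt(query: str, docs: List[str]) -> str:
    """Augment the user's question with retrieved context before calling the model."""
    context = "\n".join(f"- {c}" for c in retrieve(query, docs))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you don't know.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


prompt = build_grounded_prompt("How many days until refunds are issued?", DOCUMENTS)
print(prompt)
```

The instruction to answer only from the supplied context, and to admit when it is missing, is what ties the model's response to your own data rather than its general training.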

Evaluating Performance: Key Strategies for Testing and Improving Models

We often see teams skip the testing step, but an evaluation suite is critical for iterating quickly: without one, you cannot tell whether a change to a prompt or model made results better or worse.

User-graded evaluation suites

User-graded evaluation suites, in which humans assess AI model responses to preset prompts, are crucial for measuring AI performance. Reviewers grade each response on a scale or as good/bad to establish a performance baseline, which can then be monitored and improved over time. Running and reviewing these suites regularly is integral to understanding how well the model performs and whether it meets expected outcomes. However, this method is limited in scalability because it requires extensive human involvement.
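As a minimal Python sketch of turning human grades into a baseline, assuming grades on a 1 to 5 scale stored per preset prompt (a good/bad scheme fits too if you record 1 and 0 and set `passing=1`): `baseline` summarizes one run, and `regressions` flags prompts whose average grade dropped between runs. The prompts and grades below are made-up example data.

```python
from statistics import mean
from typing import Dict, List

# Human grades per preset prompt; keys and values here are hypothetical example data.
Grades = Dict[str, List[int]]


def baseline(grades: Grades, passing: int = 4) -> Dict[str, float]:
    """Summarize human grades into a baseline: mean score and share of passing grades."""
    scores = [g for per_prompt in grades.values() for g in per_prompt]
    return {
        "mean_score": mean(scores),
        "pass_rate": sum(g >= passing for g in scores) / len(scores),
    }


def regressions(old: Grades, new: Grades) -> List[str]:
    """Prompts whose mean human grade dropped between two evaluation runs."""
    return [p for p in old if p in new and mean(new[p]) < mean(old[p])]


run_v1 = {"refund policy": [5, 4], "reset password": [3, 2]}
run_v2 = {"refund policy": [4, 4], "reset password": [4, 5]}
print(baseline(run_v1))  # {'mean_score': 3.5, 'pass_rate': 0.5}
print(regressions(run_v1, run_v2))  # ['refund policy']
```

Tracking regressions per prompt, not just the overall average, matters because a change can raise the average while quietly breaking a specific case.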

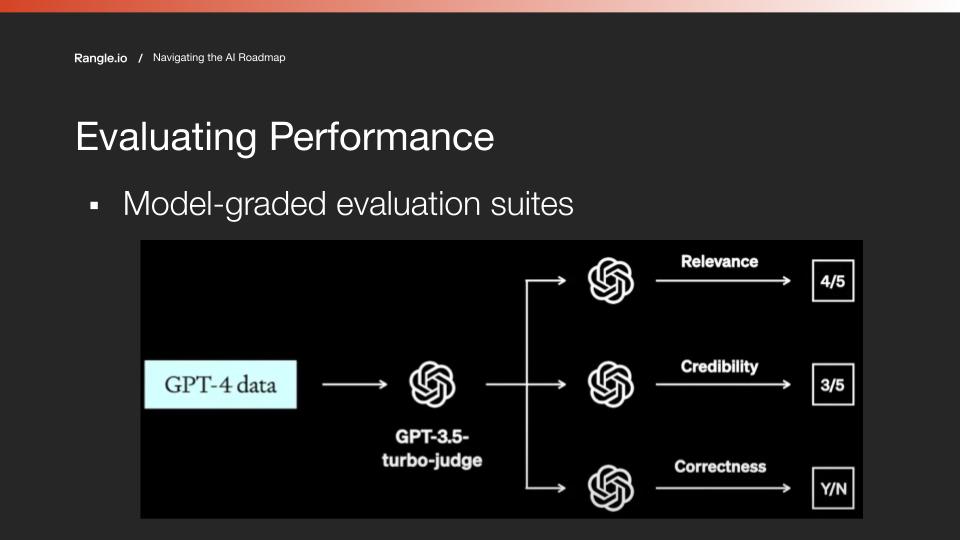

Model-graded evaluation suites

Model-graded evaluation suites, in which one AI model assesses another model's responses, are a scalable and efficient way to evaluate AI performance. This best practice allows broader evaluation than human grading and is crucial for iterative AI development and for maintaining quality and effectiveness in more complex, evolving AI systems.
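The Python sketch below shows the general shape of a model-graded suite, under stated assumptions: `call_candidate` and `call_judge` are hypothetical callables that would wrap whichever model APIs you use, and the PASS/FAIL prompt in `JUDGE_TEMPLATE` is one illustrative grading rubric, not a standard. The toy stubs exist only so the example runs offline; a real judge would be a model reading the prompt.

```python
from typing import Callable, Dict, List

JUDGE_TEMPLATE = (
    "You are grading an AI assistant's answer.\n"
    "Question: {question}\n"
    "Reference answer: {reference}\n"
    "Assistant answer: {answer}\n"
    "Reply with exactly one word: PASS or FAIL."
)


def judge_case(question: str, reference: str, answer: str,
               call_judge: Callable[[str], str]) -> bool:
    """Ask the judge model for a verdict and parse it into a boolean."""
    verdict = call_judge(JUDGE_TEMPLATE.format(
        question=question, reference=reference, answer=answer))
    return verdict.strip().upper().startswith("PASS")


def run_model_graded_suite(cases: List[Dict[str, str]],
                           call_candidate: Callable[[str], str],
                           call_judge: Callable[[str], str]) -> Dict[str, object]:
    """Run every case through the candidate model and let the judge model grade it."""
    if not cases:
        raise ValueError("evaluation suite is empty")
    results = []
    for case in cases:
        answer = call_candidate(case["question"])
        passed = judge_case(case["question"], case["reference"], answer, call_judge)
        results.append({"question": case["question"], "answer": answer, "passed": passed})
    pass_rate = sum(r["passed"] for r in results) / len(results)
    return {"pass_rate": pass_rate, "results": results}


# Toy stubs: a candidate that only knows one answer, and a "judge" that checks for the reference.
def toy_candidate(question: str) -> str:
    return "Paris" if "France" in question else "I'm not sure."


def toy_judge(prompt: str) -> str:
    reference = prompt.split("Reference answer: ")[1].split("\n")[0]
    answer = prompt.split("Assistant answer: ")[1].split("\n")[0]
    return "PASS" if reference.lower() in answer.lower() else "FAIL"


cases = [
    {"question": "What is the capital of France?", "reference": "Paris"},
    {"question": "What is the capital of Peru?", "reference": "Lima"},
]
report = run_model_graded_suite(cases, toy_candidate, toy_judge)
print(report["pass_rate"])  # 0.5
```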

As these tools improve, it's important to periodically reassess the AI models you use. For example, if you've been using a model like GPT-3.5-turbo as your judge for six months, newer models may now offer better quality or lower cost. To ensure you're getting the best quality at a reasonable cost, stay up to date with the rapidly changing AI market and regularly re-evaluate your choice of models.
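One way to make that re-evaluation routine is to rerun your evaluation suite against each candidate model and pick the cheapest one that clears a quality bar. The Python sketch below assumes you already have a pass rate (for example, from a model-graded suite) and a measured cost for each model; the model names and numbers are placeholders, not real benchmarks or prices.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModelOption:
    name: str                 # hypothetical model identifier
    pass_rate: float          # share of your eval suite passed, from 0 to 1
    cost_per_1k_calls: float  # your measured cost in dollars per 1,000 calls


def pick_model(options: List[ModelOption], min_pass_rate: float) -> Optional[ModelOption]:
    """Return the cheapest option that meets the quality bar, or None if none does."""
    eligible = [o for o in options if o.pass_rate >= min_pass_rate]
    return min(eligible, key=lambda o: o.cost_per_1k_calls, default=None)


# Placeholder numbers for illustration only; use results from your own evaluation runs.
options = [
    ModelOption("current-judge", pass_rate=0.92, cost_per_1k_calls=4.0),
    ModelOption("newer-small-model", pass_rate=0.88, cost_per_1k_calls=1.0),
    ModelOption("newer-large-model", pass_rate=0.95, cost_per_1k_calls=9.0),
]
best = pick_model(options, min_pass_rate=0.9)
print(best.name if best else "no model meets the bar")  # current-judge
```

Setting the quality bar first and minimizing cost second keeps a cheaper model from being adopted just because it is cheaper.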

Final Thoughts

Many people feel anxious about our ability to control AI model outputs and avoid inaccuracies in AI-generated text. However, as these models improve, so does our capacity to deliver safe, on-brand content.

Several companies are already providing industry-leading, AI-powered customer experiences. If you or your company are also considering whether to implement AI, take comfort in knowing that it doesn’t need to be cost-prohibitive. There are many pre-built models, both proprietary and open-source, to choose from, depending on the specific task you’re looking to accomplish.

When you reach the deployment stage, control for uncertainty in the user experience by keeping a human in the loop, managing expectations with AI notices, and guiding users with suggested prompts. Ensure your model’s reliability by directing it to produce output in a predefined format, or by grounding it in specific, reliable data sources so its responses are relevant and accurate. Finally, build an evaluation suite so you can measure and improve performance as you iterate.