Software testing is expensive. Did you know it can amount to as much as 25% of total project costs?

Motivation

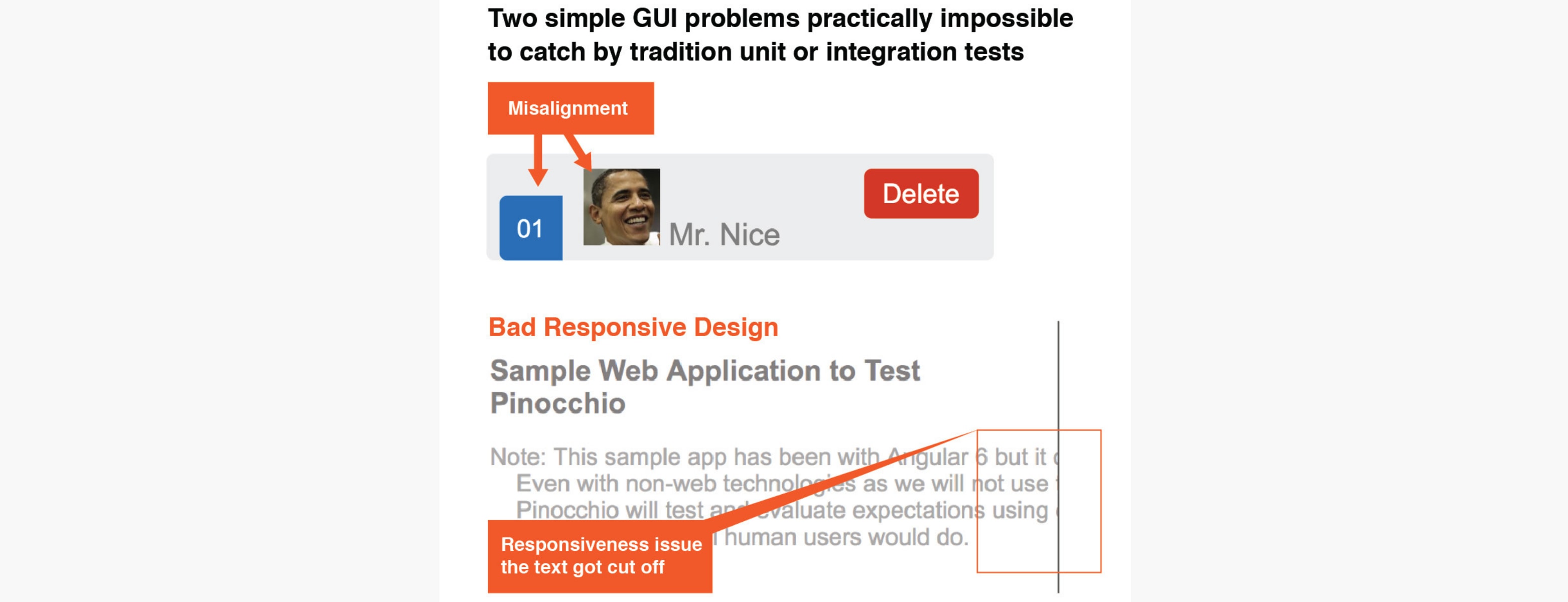

Although unit tests and automated integration tests have reduced the manual work needed to run tests, plenty of manual effort remains: tests need to be written and maintained, failed tests need to be interpreted, and the code needs to be corrected accordingly. In addition, current automated test strategies miss many of the user interface problems that a human would catch, such as cut-off elements, misalignment, and responsiveness issues (see Figure 1). User interfaces are a big challenge for traditional automated tests, which operate on the code rather than on the fully rendered output.

To address this, we propose to execute and validate tests through the eyes of the user, by looking at the screen rather than at the code. Computer vision can be used to detect visual changes. But what rules would filter out all the inconsequential changes, for example, the layout shifts that occur when a responsive page is resized? We believe that machine learning (ML) can overcome some of these software testing challenges.
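To make the "rules" problem concrete, here is a minimal sketch of a hand-written visual check of the kind ML would replace. It assumes screenshots are already available as equal-sized grids of grayscale values (0 to 255); the function names and the two thresholds are hypothetical and chosen only for illustration. Any pixel that moves by more than `pixel_tolerance` counts as changed, and the screen is flagged when the changed fraction exceeds `max_changed_fraction`.

```python
from typing import List

# Hypothetical screenshot representation: rows of grayscale pixels (0-255).
Image = List[List[int]]


def changed_fraction(before: Image, after: Image, pixel_tolerance: int = 10) -> float:
    """Return the fraction of pixels whose intensity differs by more than pixel_tolerance."""
    if len(before) != len(after) or any(len(b) != len(a) for b, a in zip(before, after)):
        # A fixed pixel-by-pixel rule cannot compare screens of different sizes,
        # which is exactly what happens when a responsive page is resized.
        raise ValueError("screenshots must have the same dimensions")
    total = sum(len(row) for row in before)
    changed = sum(
        1
        for row_b, row_a in zip(before, after)
        for pb, pa in zip(row_b, row_a)
        if abs(pb - pa) > pixel_tolerance
    )
    return changed / total if total else 0.0


def is_visual_regression(
    before: Image,
    after: Image,
    pixel_tolerance: int = 10,
    max_changed_fraction: float = 0.01,
) -> bool:
    """Flag a regression when too many pixels changed noticeably."""
    return changed_fraction(before, after, pixel_tolerance) > max_changed_fraction
```

For example, on a 4×4 white screen, blacking out a single pixel changes 1/16 of the image and is flagged, while slight uniform noise is ignored. The brittleness is the point: every threshold and every special case (resizing, animations, dynamic content) has to be tuned by hand, whereas a trained model could learn which differences matter from examples.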

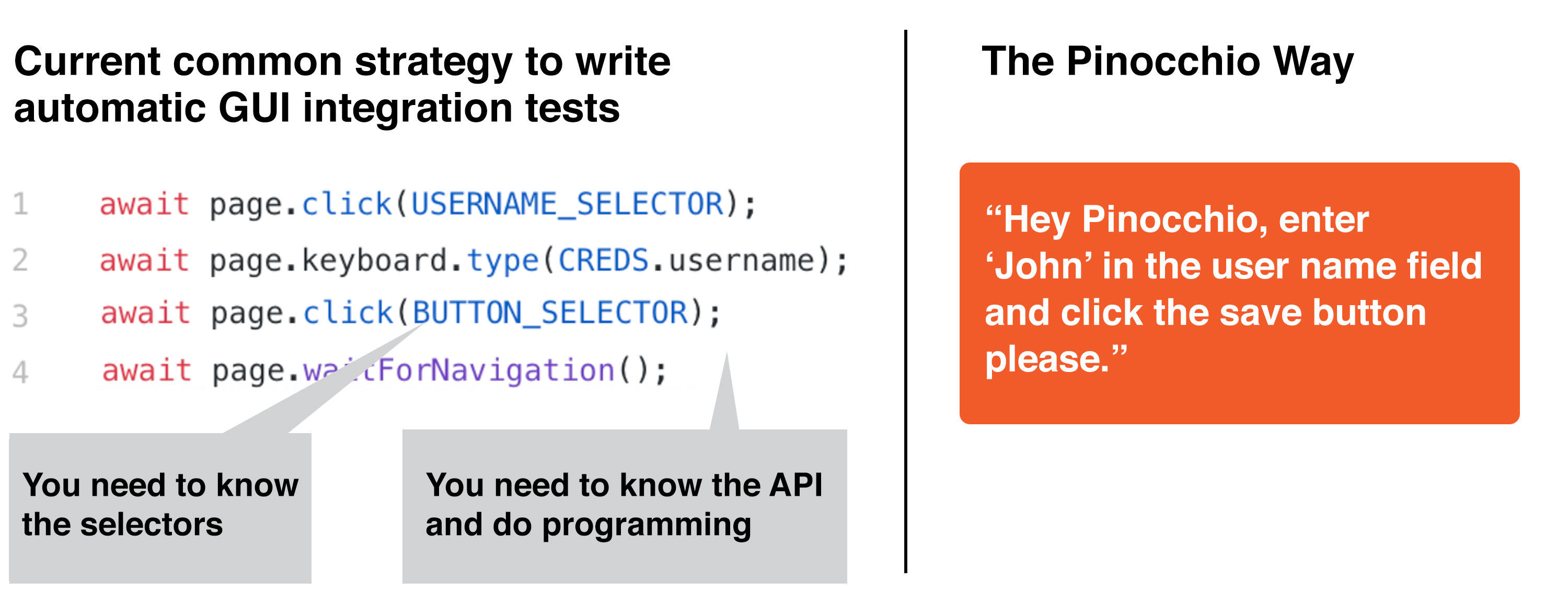

Machine learning models can be trained with examples and therefore don't require a pre-specified set of rules. Machine learning could also reduce the work and expertise needed to write a test, even with modern tools. For example, to test entering data in a form field, you need to know the 'selector' (such as a CSS selector or XPath expression) that locates the element in the HTML page. With state-of-the-art computer vision and natural language processing techniques, it should be possible to specify tests in natural language instead, for example: "type username 'John' and click the 'Save' button."
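Whatever model eventually interprets such a sentence, it has to turn it into structured steps (an action, a target element described in human terms, and an optional value). The sketch below shows that target representation using plain regular expressions rather than ML; the `Step` class, the two-verb grammar, and `parse_instruction` are all hypothetical and only cover the example sentence's pattern.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Step:
    action: str                  # "type" or "click" in this toy grammar
    target: str                  # human-readable element description, not a selector
    value: Optional[str] = None  # text to enter, for "type" steps


# Hypothetical patterns: "type <field> '<value>'" and "click [the] '<label>' [button]".
TYPE_RE = re.compile(r"^type\s+(?P<target>[\w ]+?)\s+'(?P<value>[^']*)'$", re.IGNORECASE)
CLICK_RE = re.compile(r"^click\s+(?:the\s+)?'(?P<target>[^']*)'(?:\s+button)?$", re.IGNORECASE)


def parse_instruction(text: str) -> List[Step]:
    """Split a sentence on 'and' and map each clause to a Step."""
    steps = []
    for clause in re.split(r"\s+and\s+", text.strip().rstrip(".")):
        clause = clause.strip()
        if m := TYPE_RE.match(clause):
            steps.append(Step("type", m["target"], m["value"]))
        elif m := CLICK_RE.match(clause):
            steps.append(Step("click", m["target"]))
        else:
            raise ValueError(f"unrecognized step: {clause!r}")
    return steps
```

Parsing "type username 'John' and click the 'Save' button." yields a `type` step targeting "username" with value "John" and a `click` step targeting "Save". A hand-written grammar like this breaks quickly (a quoted value containing " and " would be split incorrectly, and every new phrasing needs a new pattern), and it still leaves open how "username" is located on screen. Those are the parts where NLP and computer vision models would come in.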

Machine learning could also play a crucial role in maintaining tests. When code and tests go out of sync, tests that are no longer relevant will fail. Machine learning could help identify these outdated tests automatically so that they can be removed.

Lastly, a promising opportunity is predicting which part of the code is to blame for a failure. Although this seems far-fetched, if we collect and use all the available information about code changes and test failures, we can learn statistically which parts of the code are more problematic than others.
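As a rough illustration of the statistical idea (not an ML model), the sketch below ranks files by how often a change touching them was followed by failing tests. The history format, a list of (files changed, tests failed?) pairs, and the function name are hypothetical; Laplace smoothing via `prior` keeps a file with a single unlucky change from jumping straight to the top.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple


def rank_suspicious_files(
    history: Iterable[Tuple[Set[str], bool]], prior: float = 1.0
) -> List[Tuple[str, float]]:
    """Rank files by smoothed failure rate.

    history: one (files touched by a change, whether tests failed afterwards) pair per change.
    Score = (failures + prior) / (changes + 2 * prior), i.e. Laplace-smoothed failure rate.
    """
    touched: Counter = Counter()
    failed: Counter = Counter()
    for files, tests_failed in history:
        for f in files:
            touched[f] += 1
            if tests_failed:
                failed[f] += 1
    scores = {f: (failed[f] + prior) / (touched[f] + 2 * prior) for f in touched}
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
```

With a toy history where `a.py` was touched twice and both changes broke the build, `b.py` twice with one failure, and `c.py` once without failures, the scores are 0.75, 0.5, and about 0.33, so `a.py` is the first place to look. A learned model could add richer signals (change size, author, test coverage), but the principle of mining past changes and failures is the same.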

Quick Recap

We've identified at least four key areas where we believe machine learning will be able to add value by automating the manual QA process:

- Execute and validate tests through the eyes of the user to catch UI-related problems.

- Write tests in natural language through the eyes of the QA person.

- Identify outdated tests to speed up triage of the failures found during regression testing.

- Locate the code that caused the test to fail to speed up the fix.

Further Reading

According to the Forrester Wave research report on Omnichannel Functional Test Automation Tools, Q3 2018, this trend is being driven by leading test automation tool vendors. Artificial intelligence (AI) and machine learning will help bolster new technologies such as natural language understanding, text understanding, voice, video and image recognition, super-efficient pattern matching, and the ability to make predictions. We surveyed the ML/AI industry and found a number of forward-thinking initiatives: Eggplant.io, Test.ai, Retest, Sofy.ai, Applitools, mabl, and Functionize.

Of those, we'd like to highlight two in particular: Applitools Eyes and Test.ai. Applitools Eyes lets you add visual checkpoints to your JavaScript Selenium tests: it grabs screenshots of your application from the underlying WebDriver, sends them to the Eyes server for validation, and fails the test if differences are found. Test.ai, created by Jason Arbon, co-author of How Google Tests Software, lets you write tests in a simple format similar to Cucumber's BDD syntax, so little or no coding is required. Its AI identifies screens and elements dynamically in any app and automatically drives your application to execute the test cases.

Although commercial vendors in the software testing space are rapidly adopting ML, the only open source project we found was Sikuli. Sikuli is still very experimental and largely confined to the academic community, which is why we decided to start our own initiative.

Conclusion: The Pinocchio Project

The Pinocchio Project is an open source initiative to bring machine learning to software testing. All code, documents, and other artifacts will be kept on GitHub. This is the first post in a series that explains the motivation, short-term goals, and first steps of the project. Stay tuned for our next post, which will dig into detailed solutions for specific goals!

This post originated from a video made as the final project for a machine learning course at U of T.