Machine learning and artificial intelligence (AI) can be daunting subjects.

You might've heard about neural networks, generative adversarial networks (GANs), and recurrent neural networks (RNNs) but have no clue what people are talking about.

It can seem like there's a high barrier to entry.

But in fact there isn't.

You can get started with AI today!

And I'm not just talking about following a video tutorial and building a sample project.

You can start using AI to enrich your users' experience right now.

In this article we'll cover:

- Differences between AI and traditional software development

- Frontend AI applications

- How you can get started with AI today

If this sounds appealing, read on to find out more.


How is AI different from traditional software development?

With traditional software development, you code the decision logic by hand, giving you systems like this:

```typescript
if (someCondition === someCriterion) {
  doAThing();
} else {
  doAnotherThing();
}
```

AI differs from traditional, rules-based software development in that you don't need to hand-craft the decision logic.

AI systems learn by example and figure out the decision logic by themselves.

Imagine you're the CTO for a photo-sharing platform.

How do you prevent users from uploading images containing explicit content?

Sure, you could employ a person to check every photo by hand, but that's not a scalable solution (not to mention expensive and unfulfilling for the individual doing the job!).

How about creating an automated solution to check the photos?

Sounds appealing, right?

But imagine trying to write rules and decision logic to decide if an image contains explicit content.

Using our template from above, a simple system might look like this:

```typescript
if (containsExplicitContent(newPhoto)) {
  rejectPhoto();
} else {
  acceptPhoto();
}
```

The difficulty lies in defining the `containsExplicitContent` function, i.e. deciding how we'll check whether a photo is explicit or not.

But how can you possibly take into account all the different types of explicit content and the minute variations of each?

It's an impossible task.

In this regard, rules-based software development is fragile: it cannot handle unexpected change.

To write software that detects explicit content, you'd need to know every possible permutation of it in advance.

Imagine going through every single explicit image on the internet and trying to figure out a set of rules that you could write to flag them but not flag other images.

And even that wouldn't give you a good enough solution: if you want a system that's 100% accurate, you'd also need to anticipate all the images that haven't been taken yet.

And how would you test such a system?

It would take a very long time and a lot of effort to build something like this.

It makes my brain hurt.


Enter AI.

By showing an AI system examples of the types of images you want to ban from your platform, you can train it to identify similar images.

For a problem like this, developing an AI system will be much faster than building a rules-based one.

The AI won't be 100% accurate, but it'll be a LOT faster and cheaper than checking the images by hand, especially at scale.

Even with 90% accuracy, you could create supporting systems in which borderline images are flagged and checked by a team of real people who approve or deny the upload.

And these cases can then be used to further train the system and improve its accuracy.

This is called “augmentation” as opposed to complete “automation”.
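Here's a minimal sketch of that augmentation idea. It assumes a pre-trained model rather than one you train yourself. Google's Vision API offers SafeSearch detection, which rates an image's `adult` content on a likelihood scale from `VERY_UNLIKELY` to `VERY_LIKELY`. The `routePhoto` function and the places where it draws its lines are hypothetical choices. Clear-cut cases are handled automatically, and borderline ones are queued for a human moderator.

```typescript
// Likelihood ratings returned by Google Cloud Vision's SafeSearch detection.
type Likelihood =
  | "UNKNOWN"
  | "VERY_UNLIKELY"
  | "UNLIKELY"
  | "POSSIBLE"
  | "LIKELY"
  | "VERY_LIKELY";

// Hypothetical outcomes for a single uploaded photo.
type Decision = "accept" | "reject" | "humanReview";

// Hypothetical routing rule: automate the clear-cut cases and send
// anything borderline (or unrated) to a human moderator.
const routePhoto = (adult: Likelihood): Decision => {
  switch (adult) {
    case "VERY_UNLIKELY":
    case "UNLIKELY":
      return "accept";
    case "LIKELY":
    case "VERY_LIKELY":
      return "reject";
    default:
      // POSSIBLE and UNKNOWN are treated as borderline
      return "humanReview";
  }
};
```

Moving those thresholds trades moderator workload against the risk of a wrong automatic decision. That is the augmentation-versus-automation balance in a nutshell.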

In summary, AI can help us where the rules are not clear and decision logic can't be written by hand.

That's all well and good, but what does it have to do with the frontend?

How can AI help frontend devs?

AI can help frontend devs to improve the UX of their product.

The most talked-about AI projects are game-changers:

- Tesla's Autopilot.

- DeepMind’s AlphaGo.

- IBM’s Watson.

But you don't need to build a self-driving car or a machine that can beat humans at strategy games.

You don't have to use AI to change the world.

You can start small and use AI to delight your users.

In fact, there are AI APIs already available that you can integrate into your apps today.

But rather than preach, I'll show you how AI can help.

Automatically add tags to images

Personally, I hate menial tasks like adding tags to images.

And I’m sure users of image-sharing platforms hate this too.

What if I told you AI can do it for you?

Neat, right?

Well, Google’s Vision API can do just this.

You can submit any image to the API and, using pre-trained models, it’ll detect objects in the image and provide you with a list.

AI photo label generation is no joke.

You don’t need to train a model to label images, Google has already done that for you.

Imagine the delight on your users’ faces when their uploaded images already have tags.

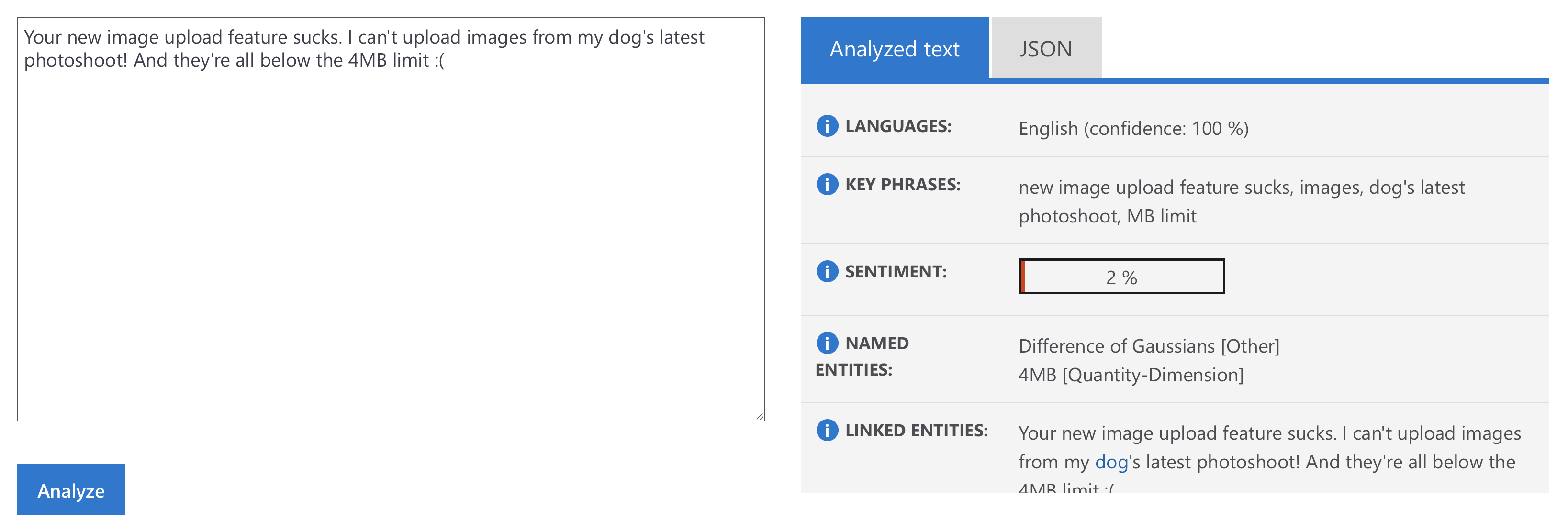
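Here's a sketch of what a label-detection call could look like from the frontend. It assumes three things: the Cloud Vision REST endpoint `images:annotate`, an API key passed as the `key` query parameter, and an image that is already base64-encoded. The helper names (`buildLabelRequest`, `extractTags`, `fetchTags`) and the 0.7 confidence cut-off are hypothetical.

```typescript
// Subset of the Cloud Vision response used for tagging.
interface LabelAnnotation {
  description: string; // the detected object or concept, e.g. "Dog"
  score: number; // confidence between 0 and 1
}

interface AnnotateResponse {
  responses: { labelAnnotations?: LabelAnnotation[] }[];
}

const VISION_URL = "https://vision.googleapis.com/v1/images:annotate";

// Build the JSON body for a single LABEL_DETECTION request.
const buildLabelRequest = (base64Image: string, maxResults = 10) => ({
  requests: [
    {
      image: { content: base64Image },
      features: [{ type: "LABEL_DETECTION", maxResults }]
    }
  ]
});

// Turn the response into lowercase tags, keeping only confident labels.
const extractTags = (res: AnnotateResponse, minScore = 0.7): string[] =>
  (res.responses[0]?.labelAnnotations ?? [])
    .filter(label => label.score >= minScore)
    .map(label => label.description.toLowerCase());

// Hypothetical helper: POST the image and return suggested tags.
const fetchTags = async (base64Image: string, apiKey: string) => {
  const response = await fetch(
    `${VISION_URL}?key=${encodeURIComponent(apiKey)}`,
    {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(buildLabelRequest(base64Image))
    }
  );
  return extractTags((await response.json()) as AnnotateResponse);
};
```

Because the request builder and response parser are separate from `fetchTags`, you can test the parsing logic without calling the API.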

Service desk sentiment tagging

Oh the good old service desk.

Don’t you love dealing with angry users?

How about this for an idea: rank service desk tickets by sentiment and give your angriest users to your most experienced service desk reps.

Microsoft Azure's Text Analytics API can help you identify the sentiment of a piece of text, which gets you some of the way to determining how upset a user is.

The API gives you a sentiment score (a low number means negative sentiment and a high number means positive sentiment) that you could use to prioritise the ticket or assign it to an experienced team member.
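Here's one way the prioritisation could work, as a sketch. It assumes each ticket already carries a sentiment score from the API, normalised so that values near 0 are negative and values near 1 are positive. It also assumes reps are listed from most to least experienced. `Ticket`, `Rep` and `assignTickets` are hypothetical. The angriest tickets are dealt out first, round-robin, starting with the most experienced rep.

```typescript
// Hypothetical shapes: a ticket with a sentiment score attached, and a rep.
interface Ticket {
  id: string;
  text: string;
  sentiment: number; // assumed 0 (very negative) to 1 (very positive)
}

interface Rep {
  name: string;
}

// Pair the most negative tickets with the most experienced reps.
// `repsByExperience` must be ordered from most to least experienced.
const assignTickets = (
  tickets: Ticket[],
  repsByExperience: Rep[]
): Map<string, Rep> => {
  const assignments = new Map<string, Rep>();
  if (repsByExperience.length === 0) return assignments;

  // Copy before sorting so the caller's array isn't mutated
  const angriestFirst = [...tickets].sort((a, b) => a.sentiment - b.sentiment);

  angriestFirst.forEach((ticket, i) => {
    // Round-robin through the reps, starting with the most experienced
    assignments.set(ticket.id, repsByExperience[i % repsByExperience.length]);
  });
  return assignments;
};
```

Round-robin is just one simple policy. It stops a single senior rep from receiving every angry ticket while still putting the worst cases in experienced hands first.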

Just another example of how easy it is to get the AI ball rolling.

Visual search

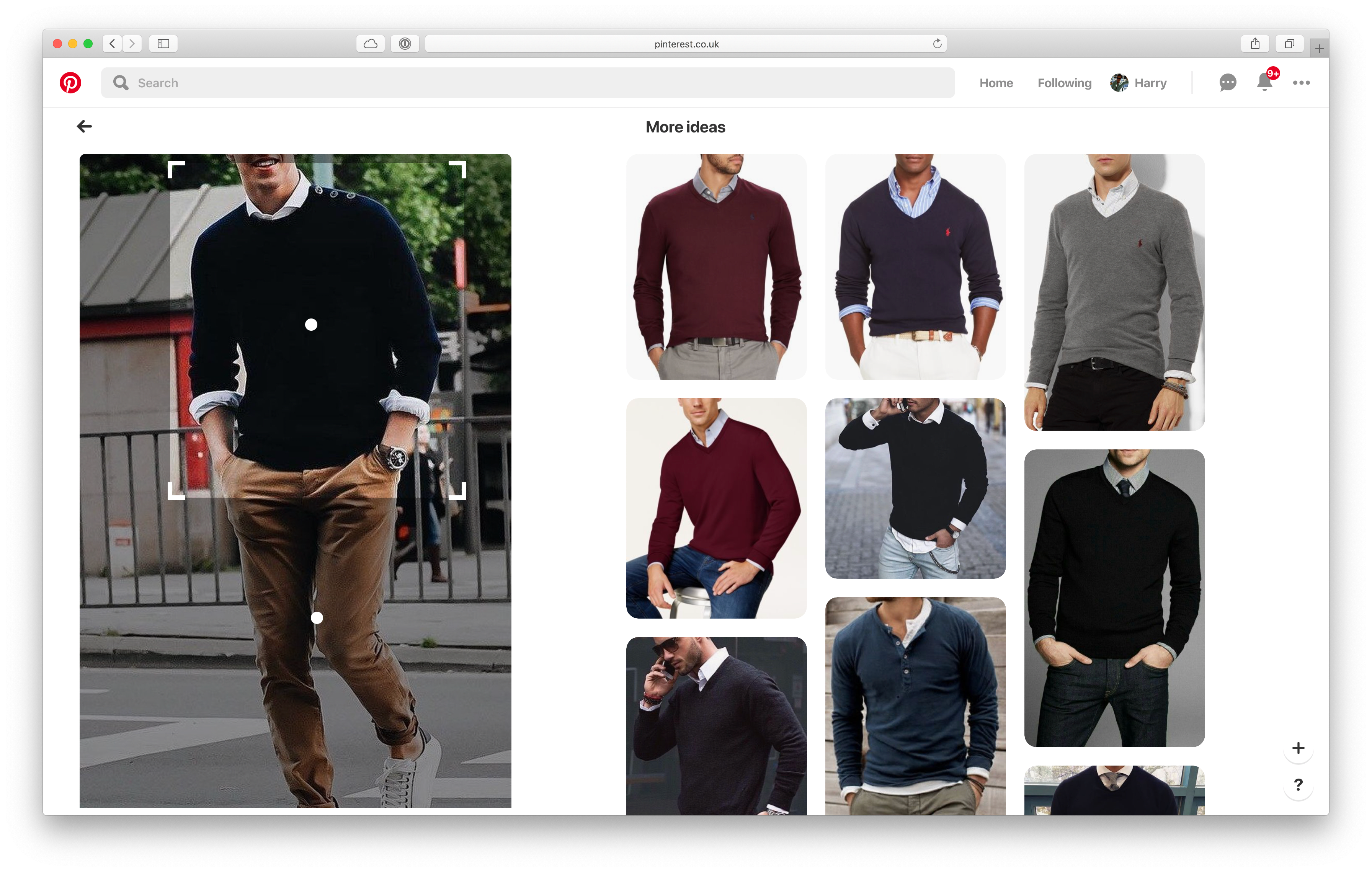

Pinterest has a visual search feature that allows users to find pins that look similar to part of an image.

Pinterest’s visual search feature finds sweaters similar to the one highlighted in the left image.

Imagine if you could allow your customers to search for items using a photo instead of text.

Users could upload a photo and search your product database for similar items.

Sounds complicated?

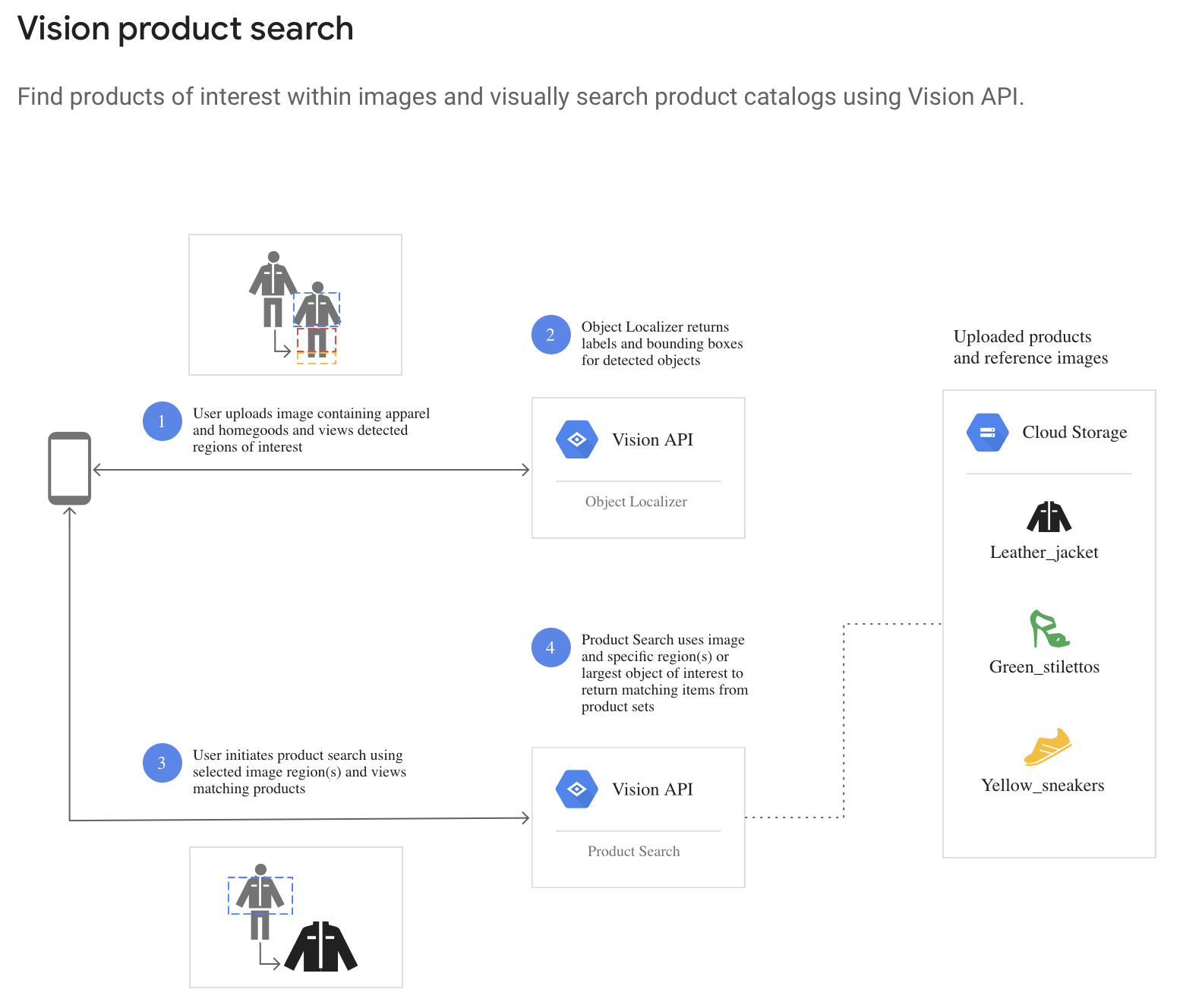

Surprise, surprise, you don't have to be an AI expert to implement something like this, you can use Google’s Vision API again.

Here’s a blueprint from their website:

Google already sketched out a visual search workflow for you.

How cool is that?!
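The following sketch is not the workflow from Google's blueprint. It is a deliberately naive, hypothetical stand-in. You could tag each product photo with Vision API label detection when it's added, then run the same detection on the user's photo. Products are then ranked by how many labels they share with the photo, using Jaccard similarity: shared labels divided by all distinct labels. `Product`, `jaccard` and `searchByLabels` are illustrative names.

```typescript
// Hypothetical product record whose tags came from label detection
// on the product photo.
interface Product {
  id: string;
  tags: string[];
}

// Jaccard similarity: |A ∩ B| / |A ∪ B|, with |A ∪ B| = |A| + |B| - |A ∩ B|.
const jaccard = (a: Set<string>, b: Set<string>): number => {
  let shared = 0;
  a.forEach(tag => {
    if (b.has(tag)) shared += 1;
  });
  const unionSize = a.size + b.size - shared;
  return unionSize === 0 ? 0 : shared / unionSize;
};

// Rank products by label overlap with the labels found in the query photo.
const searchByLabels = (
  queryLabels: string[],
  products: Product[],
  limit = 5
): Product[] => {
  const query = new Set(queryLabels);
  return products
    .map(product => ({ product, score: jaccard(query, new Set(product.tags)) }))
    .filter(result => result.score > 0) // drop products with nothing in common
    .sort((x, y) => y.score - x.score) // most similar first
    .slice(0, limit)
    .map(result => result.product);
};
```

Label overlap is coarse: two quite different red sweaters look identical to it. Treat this as a way to prototype the UX rather than as a real visual search engine.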

How can frontend devs get started?

All of the examples above use APIs, so if you can use an API, you can do AI.

To show you just how easy it is I’ve put together a demo app that uses Microsoft Azure’s Face API.

The app replicates part of a sign-up process that involves verifying your identity, and it is aptly named Verïfi.

Users must provide a photo of a government-issued ID and a clear photo of their face. Someone at the company then uses the photos to verify the user’s identity.

The problem is that the photo of the user’s face might not be of good enough quality for their identity to be verified.

And the company won’t know this until someone tries to verify the user’s ID, which might be a few hours or even a day after the user has uploaded their photos.

At this point the user will typically get an email telling them that the company could not verify their identity and they must submit a better quality photo.

Now, if I’m a user who gets that email, I’m much less interested in trying to sign up for these services. I don’t want to go through the sign-up process again and take new photos.

I can’t be bothered.

And many users who fail the verification process will feel the same, causing the company to lose users due to their poor UX.

We can improve this with AI.

How?

By giving users feedback on their photo in real-time.

The demo

Check this out:

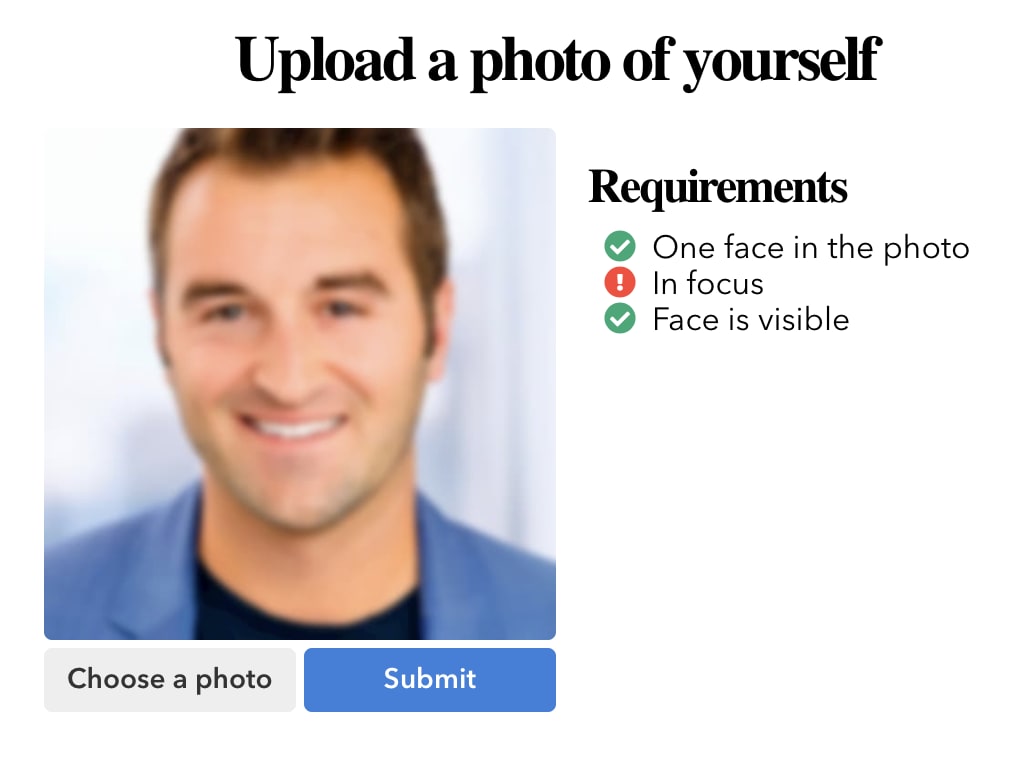

We just have three simple requirements here:

- Only one face in the photo

- Face must be in focus

- Face must be visible

When I upload this blurry image, the API quickly tells us that there is in fact one face in the photo, but it’s not in focus.

Using this information we can alert the user and give them the opportunity to change the photo or proceed anyway.

The confirmation step is important because we can’t rely on AI alone.

AI is not perfect.

We should give the user a way to override the AI’s recommendation.

In Verïfi we do that by notifying the user that there might be a problem with the photo they’ve uploaded but we still give them the option to submit it anyway.
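Here's a minimal sketch of that warn-but-allow flow. It assumes the four boolean checks produced by Verïfi's `transform` function in the code section. The `submissionState` helper and its message wording are hypothetical. So is the choice to report only the face-count problem when there isn't exactly one face. That choice follows from the transform, which evaluates the other checks only when a single face is found.

```typescript
// Boolean checks mirroring those computed by Verïfi's transform function.
interface PhotoChecks {
  hasSingleFace: boolean;
  isInFocus: boolean;
  isCorrectBrightness: boolean;
  isVisibleFace: boolean;
}

// Hypothetical UI state: the AI can only warn; the user always decides.
interface SubmissionState {
  warnings: string[];
  canSubmit: true; // never blocked by the AI's recommendation
  needsConfirmation: boolean; // ask "Submit anyway?" when a check fails
}

// Illustrative user-facing messages for each failed check.
const MESSAGES: Record<keyof PhotoChecks, string> = {
  hasSingleFace: "We couldn't find exactly one face in this photo.",
  isInFocus: "Your face may be out of focus.",
  // The transform only fails brightness for underexposed photos
  isCorrectBrightness: "The photo may be too dark.",
  isVisibleFace: "Part of your face may be covered."
};

const submissionState = (checks: PhotoChecks): SubmissionState => {
  // The other checks are only meaningful when exactly one face was found
  const keys: (keyof PhotoChecks)[] = checks.hasSingleFace
    ? ["isInFocus", "isCorrectBrightness", "isVisibleFace"]
    : ["hasSingleFace"];
  const warnings = keys.filter(key => !checks[key]).map(key => MESSAGES[key]);
  return { warnings, canSubmit: true, needsConfirmation: warnings.length > 0 };
};
```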

With an updated UX like this, I’d expect to see a decrease in the number of users uploading poor quality photos and an increase in user retention at this point in the sign up flow.

The code

And here’s the “magic” behind the photo quality-check:

```typescript
export const request = (
  photoBlob: Blob,
  photoDimensions: { height: number; width: number }
) => {
  // Read the subscription key from the environment (empty string if unset)
  const API_KEY = process.env.REACT_APP_MS_API_KEY || "";
  const API_URL =
    "https://rangleio.cognitiveservices.azure.com/face/v1.0/detect";
  const API_PARAMS = {
    returnFaceId: "false",
    returnFaceLandmarks: "false",
    returnRecognitionModel: "false",
    returnFaceAttributes: "accessories,blur,exposure,glasses,noise,occlusion",
    detectionModel: "detection_01",
    recognitionModel: "recognition_02"
  };

  // Assemble the URL and query string params
  const reqParams = Object.keys(API_PARAMS)
    .map(key => `${key}=${API_PARAMS[key as keyof typeof API_PARAMS]}`)
    .join("&");
  const reqURL = `${API_URL}?${reqParams}`;

  // Fetch via POST with required headers; body is the photo itself
  return fetch(reqURL, {
    method: "POST",
    headers: {
      "Content-Type": "application/octet-stream",
      "Ocp-Apim-Subscription-Key": API_KEY
    },
    body: photoBlob
  }).then(response =>
    response.json().then(json => ({ json, photoDimensions }))
  );
};
```

It’s just an API call.

That’s it!

Told ya it was easy.

Send your photo to the endpoint and you’ll get a response containing a whole bunch of data.

Here’s what we care about:

```json
[
  {
    "faceAttributes": {
      "occlusion": {
        "foreheadOccluded": false,
        "eyeOccluded": false,
        "mouthOccluded": false
      },
      "accessories": [
        { "type": "headWear", "confidence": 0.99 },
        { "type": "glasses", "confidence": 1.0 },
        { "type": "mask", "confidence": 0.87 }
      ],
      "blur": {
        "blurLevel": "Medium",
        "value": 0.51
      },
      "exposure": {
        "exposureLevel": "GoodExposure",
        "value": 0.55
      },
      "noise": {
        "noiseLevel": "Low",
        "value": 0.12
      }
    }
  }
]
```

We use the blur and noise levels to determine whether the face is in focus, and the exposure level to check its brightness.

The `occlusion`, `accessories` and `glasses` attributes tell us whether the face is visible.

And the length of the outermost array tells us how many faces are in the photo.
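If you'd rather not work with `any`, you can describe the part of the response shown above with TypeScript types. This sketch is based only on the fields in the example, plus the `glasses` attribute requested via `returnFaceAttributes`. It is not a complete model of the Face API response. The `parseFaces` helper is hypothetical. It separates the face list from an error object, mirroring the `Array.isArray` check in Verïfi's transform.

```typescript
// Fields from the example response (levels are strings like "Low", "Medium").
interface FaceAttributes {
  occlusion: {
    foreheadOccluded: boolean;
    eyeOccluded: boolean;
    mouthOccluded: boolean;
  };
  accessories: { type: string; confidence: number }[];
  blur: { blurLevel: string; value: number };
  exposure: { exposureLevel: string; value: number };
  noise: { noiseLevel: string; value: number };
  glasses?: string; // requested, e.g. "NoGlasses"; not shown in the example
}

interface DetectedFace {
  faceAttributes: FaceAttributes;
}

// The error shape the transform reads `error.message` from.
interface FaceApiError {
  error: { message: string };
}

type ParsedFaces =
  | { ok: true; faces: DetectedFace[] }
  | { ok: false; message: string };

// An array means success, with one entry per detected face.
const parseFaces = (json: DetectedFace[] | FaceApiError): ParsedFaces =>
  Array.isArray(json)
    ? { ok: true, faces: json }
    : { ok: false, message: json.error.message };
```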

Once we have this data, we just need to define a transform function that converts it into a format we can use in the app, i.e. boolean values telling us whether each requirement has been met.

Here’s the example from Verïfi:

```typescript
export const transform = (response: { json: any }) => {
  const { json } = response;
  const requirements = {
    score: 0,
    errorMessage: null,
    hasSingleFace: false,
    isInFocus: false,
    isCorrectBrightness: false,
    isVisibleFace: false
  };

  // Capture error returned from API and abort
  if (!Array.isArray(json)) {
    return Object.assign({}, requirements, {
      errorMessage: json.error.message
    });
  }

  // If exactly 1 face is detected, we can evaluate its attributes in detail
  requirements.hasSingleFace = json.length === 1;
  if (requirements.hasSingleFace) {
    const {
      faceAttributes: {
        blur: { blurLevel },
        noise: { noiseLevel },
        exposure: { exposureLevel },
        glasses,
        occlusion,
        accessories
      }
    } = json[0];

    // All conditions must be true to consider a face "visible"
    // Put in array to make the subsequent assignment less verbose
    const visibleChecks = [
      glasses === "NoGlasses",
      Object.values(occlusion).every(v => v === false),
      accessories.length === 0
    ];

    requirements.isInFocus =
      blurLevel.toLowerCase() === "low" && noiseLevel.toLowerCase() === "low";
    requirements.isCorrectBrightness =
      exposureLevel.toLowerCase() === "goodexposure" ||
      exposureLevel.toLowerCase() === "overexposure";
    requirements.isVisibleFace = visibleChecks.every(v => v === true);
  }

  // Use results to compute a "score" between 0 and 1
  // Zero means no requirements are met; 1 means ALL requirements are met (perfect score)
  // We actively ignore `errorMessage`, `score` in calculation because they're never boolean
  const values = Object.values(requirements);
  requirements.score =
    values.filter(e => e === true).length / (values.length - 2);

  return requirements;
};
```

The returned requirements are then used to tell the user whether their photo is acceptable.


The boolean values are used to change the icon displayed next to each requirement. This photo fails the "in focus" requirement but passes the others.
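As a worked example of the score, using only the transform shown above: `Object.values(requirements)` yields six values. The `values.length - 2` denominator leaves the four boolean checks, and only `true` values count toward the numerator (`score` is still `0` and `errorMessage` is `null` at that point). The photo above has a single visible, well-exposed face but fails the in-focus check, so its score works out as follows:

$$
\text{score} = \frac{\left|\{\, v \in \text{values} : v = \text{true} \,\}\right|}{6 - 2} = \frac{3}{4} = 0.75
$$

A photo that passes every check scores $\frac{4}{4} = 1$.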

And there we have it.

We’ve added AI to an app and used it to improve UX!

That wasn’t so hard, was it?

Summary

As we’ve seen, you don’t need to be an AI expert to take advantage of the benefits it can provide.

Jimi Hendrix didn’t make his own guitar, van Gogh didn’t make his own brushes, and you don’t need to build your own AI models.

Let other companies build them for you.

All the usual suspects are doing it and offer AI APIs, including Google and Microsoft, whose APIs appear in the examples above.

By leveraging their collective knowledge, you can focus on what you do best: building the frontend and improving your users’ experience.

Remember, if you can use an API, you can do AI.

So what opportunities can you find to improve UX with AI?