Claude Code is amazing. You give it a task, it breaks it down, and then it builds it, fast. In the past two days, I’ve produced over 10,000 lines of code and shipped four client libraries for an open-source product.

But I’ve discovered a hidden trap with vibe coding, especially when the coding goes from solo side project to shared production system: it’s way too easy to over-index on acting and under-index on thinking.

Here’s what I mean.

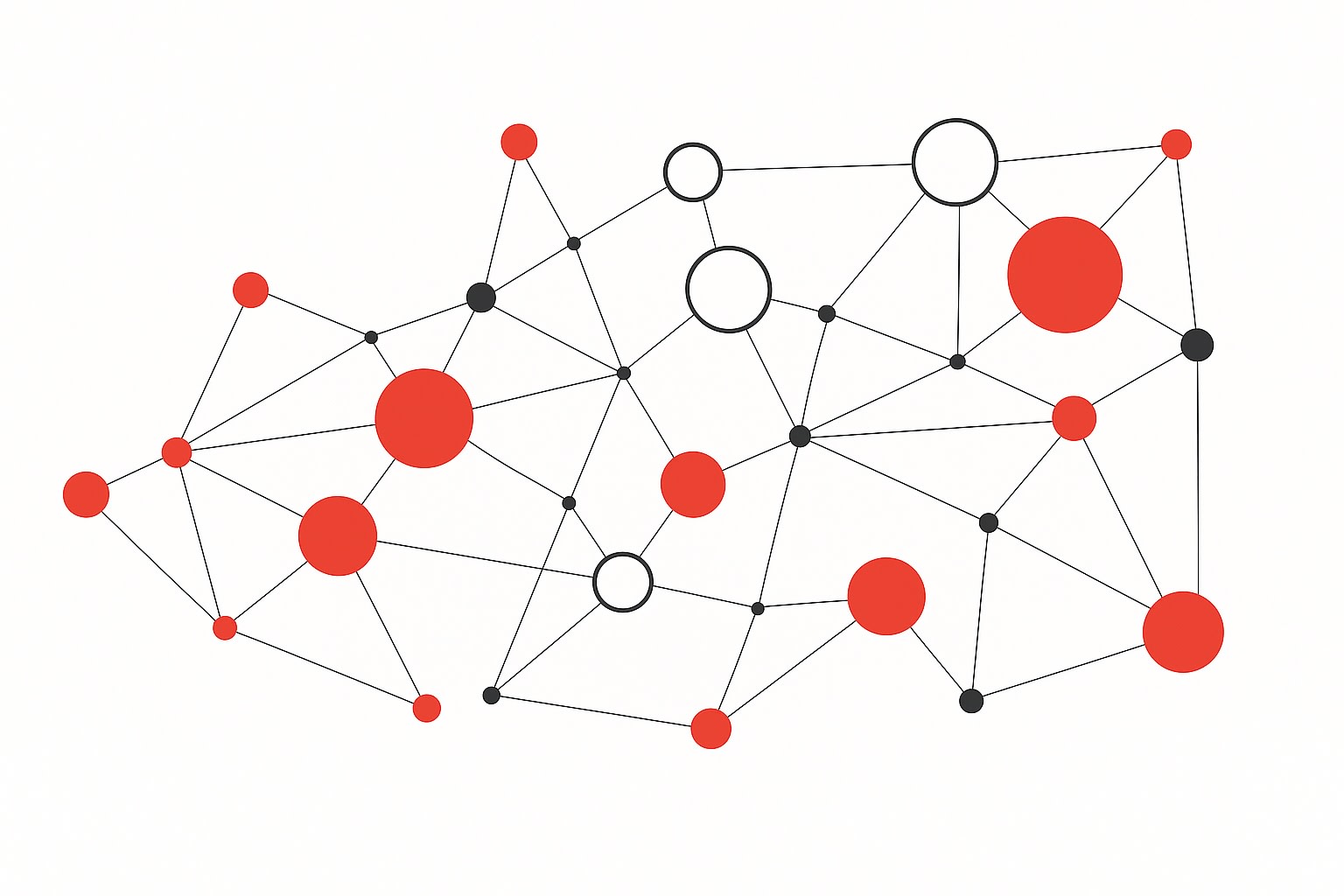

The OODA Loop, Applied to Code

I started thinking about my coding process through the lens of the OODA loop: Observe → Orient → Decide → Act.

When I'm in "vibe coding" mode with AI, most of my time goes into Act. A bit goes into Decide. But Observe and Orient? Those get skipped almost entirely.

And skipping those early steps has consequences.

When you're building something in an unfamiliar codebase, or when the architecture requirements aren't crystal clear, it's easy to lose the thread. You build fast, but you don't always know where you're going. When the AI gets something subtly wrong early in the process, it compounds. The result? Clean code, fast output... wrong abstraction.

I was getting caught in this loop: generate code, iterate, optimize, ship. Because I wasn't spending enough time understanding (or articulating) the problem space before jumping into solution mode, I kept ending up in AI loops, iterating on output without getting any closer to the right solution.

Adding the Interview Step

To avoid getting trapped in the Act phase of the OODA loop, I’ve introduced something I call the “interview step” into my workflow. It’s a deliberate pre-build phase designed to surface relevant context before any code gets generated.

First, I co-develop a high-level plan with the AI, defining what we’re about to build. Then, I ask the AI to interview me about that plan. The goal is to uncover gaps, assumptions, and questions the AI might not have raised on its own. The answers from this interview feed back into the plan.

Here’s a prompt template I use:

You are an AI engineer tasked with interviewing a user about a Product Requirements Document (PRD) or GitHub issue to clarify requirements and implementation details. Your goal is to ask insightful, context-aware questions that will help ensure a successful implementation. Follow these steps:

1. Read the referenced PRD or GitHub issue: [ link ]

2. Analyze the document and identify areas that require clarification, further detail, or confirmation.

3. Develop a list of at least 5 thoughtful questions. Each question should be specific, actionable, and aimed at uncovering important details or resolving ambiguities.

4. Format your questions as a checklist:

<interview_questions>

- [ ] Question 1

- [ ] Question 2

- [ ] Question 3

</interview_questions>

5. Present the questions to the user one at a time, in an interview style. For each question:

a) Provide a brief background or context for why you are asking.

b) Wait for the user's response before proceeding to the next question.

6. After all questions have been answered, summarize the key clarifications and any remaining uncertainties.

7. Ask the user if they have any additional clarifications or comments to add before proceeding.

8. Update the referenced GitHub issue with the results of the interview. Bring all clarifications and insights back into the issue in a meaningful way, enhancing rather than replacing existing ideas or content in the issue.

Your final output should include:

1. The full checklist of questions

2. The user's answers

3. A summary of clarifications and open questions

4. Any additional clarifications from the user

5. A draft or summary of the content to be added to the GitHub issue

This process clarifies intent before execution and surfaces ambiguity I didn’t realize was there. It also generates context I can feed into the next prompt, so the AI understands what I really mean, which may not be what I initially described.
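To make the "feed it into the next prompt" part concrete, here is a minimal Python sketch of how the interview output could be turned into reusable context. It is an illustration, not part of my actual tooling: the function names `parse_checklist` and `build_context`, the sample questions, and the layout of the resulting context block are all assumptions. The only thing taken from the template above is the `<interview_questions>` checklist format.

```python
import re


def parse_checklist(model_output: str) -> list[str]:
    """Extract questions from an <interview_questions> checklist block.

    Hypothetical helper: assumes the model followed the template and
    wrapped its "- [ ] ..." items in <interview_questions> tags.
    """
    match = re.search(
        r"<interview_questions>(.*?)</interview_questions>",
        model_output,
        flags=re.DOTALL,
    )
    if match is None:
        return []
    # Each checklist item looks like "- [ ] Question text".
    items = re.findall(
        r"^\s*-\s*\[[ xX]\]\s*(.+)$", match.group(1), flags=re.MULTILINE
    )
    return [item.strip() for item in items]


def build_context(questions, answers, open_questions=()):
    """Format interview results as a text block to prepend to the next prompt.

    The "Q:/A:" layout is an assumption; any consistent format would do.
    """
    if len(questions) != len(answers):
        raise ValueError("each question needs exactly one answer")
    lines = ["## Interview clarifications"]
    for question, answer in zip(questions, answers):
        lines.append(f"- Q: {question}")
        lines.append(f"  A: {answer}")
    if open_questions:
        lines.append("## Open questions")
        lines.extend(f"- {q}" for q in open_questions)
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up model output, for illustration only.
    sample = (
        "<interview_questions>\n\n"
        "- [ ] Which filters are required?\n\n"
        "- [ ] What query latency is acceptable?\n\n"
        "</interview_questions>"
    )
    questions = parse_checklist(sample)
    print(build_context(questions, ["Date and region", "Under 2 seconds"]))
```

The design choice worth noting is that answers and open questions stay separate, so the next prompt can tell settled requirements apart from things that are still uncertain.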

Avoid Misaligned AI-Generated Product Requirements Documents

What I've noticed since adding this step is that the rest of the workflow sharpens up dramatically. The AI builds more thoughtfully. The solutions it proposes are better aligned with actual requirements. The code it generates fits more naturally into the broader system because we spent time anchoring on that system up front.

Here's a concrete example. Last week I was tasked with adding filtering functionality to a data dashboard. This time, the workflow started with the interview step. It turned out that what users actually needed wasn't just UI filters but a completely different data architecture to support the kinds of queries they were running. By understanding this context up front, I built a much more effective solution and avoided a complete rewrite later.

The AI's suggestions became more sophisticated too. When I provide rich context about user needs, system constraints, and business requirements, it can anticipate edge cases and suggest better architectural patterns.

By investing more time in Observe and Orient, I'm making fewer wrong turns during implementation. I'm building solutions that integrate better with existing systems. I'm catching potential issues before they become expensive problems.

Where This Is Going

We're still early in this transition to AI-assisted development. Everyone's figuring out their own workflows and tooling. But what's clear to me is that human judgment still plays a critical role in framing problems, setting context, and making architectural decisions, and the speed of AI-assisted development amplifies the cost of skipping it.

When you’re building solo, in short bursts, it’s easy to vibe code your way through a project. But as soon as the code you’re writing feeds into something shared – something with stakeholders, edge cases, users, constraints – you need a feedback loop before the build loop.

If you're working with AI tools and finding that the generated code technically works but lacks cohesion or misses the mark strategically, spend more time in Observe and Orient. Interview your problem before solving it. Use the machine not just to build, but to think with you.