Measuring Performance

For each optimization attempt, we compared the performance of:

- the `main` branch of the platform without the new optimization

- the feature branch with the optimization implemented

- the existing REST-ish API as a baseline

We used multiple tools to measure performance and help decide what changes to try.

Load Testing Tools

We used an internal tool for blasting thousands of requests at our API servers in a dedicated testing environment. Following the test, the tool reported various response time measurements, including average, median, and percentiles. This gave us a high-level view of how our changes performed over many requests.
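The internal tool itself isn't shown here, but the kind of summary it reported can be sketched in a few lines. This is a minimal illustration, not the tool's implementation: `summarize`, `percentile`, and the `LatencySummary` shape are hypothetical names, and the percentile uses the simple nearest-rank method.

```typescript
// Hypothetical helper: summarize response times (in ms) from one load test run.
interface LatencySummary {
  average: number;
  median: number;
  p95: number;
  p99: number;
}

// Nearest-rank percentile over an ascending-sorted array.
// For even-length inputs this picks the lower middle value as the median.
function percentile(sorted: number[], p: number): number {
  if (sorted.length === 0) throw new Error("no samples");
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

function summarize(samplesMs: number[]): LatencySummary {
  // Copy before sorting so the caller's array is not mutated.
  const sorted = [...samplesMs].sort((a, b) => a - b);
  const total = sorted.reduce((sum, ms) => sum + ms, 0);
  return {
    average: total / sorted.length,
    median: percentile(sorted, 50),
    p95: percentile(sorted, 95),
    p99: percentile(sorted, 99),
  };
}
```

Comparing the median with the high percentiles is useful because a single slow outlier can move the average a lot while leaving the median unchanged.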

Application Performance Monitoring Tools

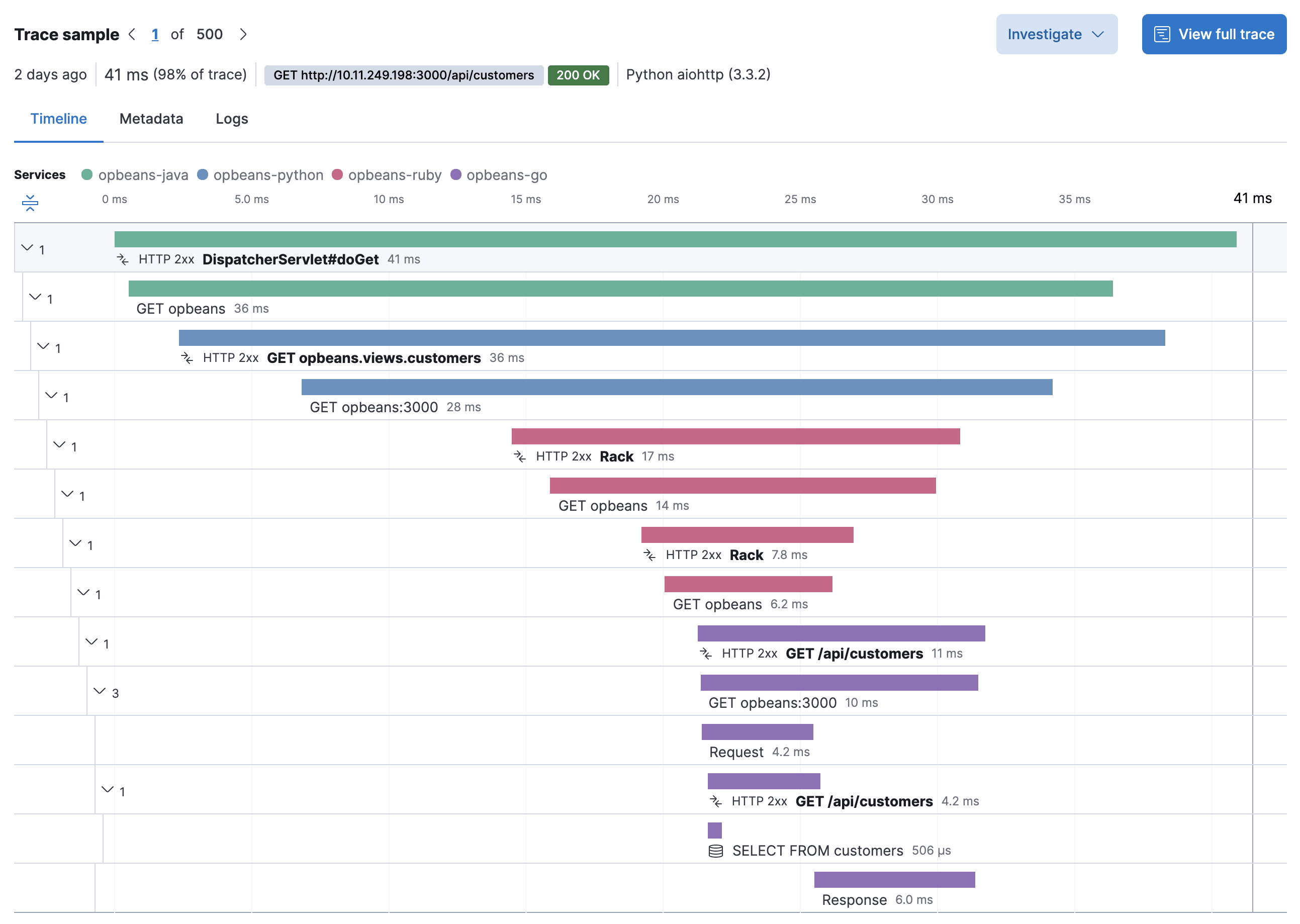

Our APM tool let us dig into individual requests and visualize a single GraphQL query as a series of database queries, async operations, and other steps on a timeline. This gave us insights into which parts of the resolvers took the most time and where bottlenecks were.

An example of an Elastic APM trace

ElasticSearch's Reporting

Our primary database was ElasticSearch, which includes timing information with each response in the `took` field.

    {
      "took" : 11,
      "timed_out" : false,
      "_shards" : {
        ...
      },
      "hits" : {
        ...
      }
    }

`took` shows how long queries take within the database itself (typically 7–20ms). Combined with our APM tools, we could determine whether a particular DB query was slow, or if there was a problem in our infrastructure or code based on how long a query took round trip (usually 30–60ms in our case).
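The comparison between `took` and round-trip time can be sketched as a small classifier. This is an illustration under assumptions: only `took` comes from the ElasticSearch response, while `roundTripMs`, the `QueryTiming` shape, and both function names are hypothetical. The default thresholds are simply the upper ends of the typical ranges quoted above.

```typescript
// Hypothetical shape: `tookMs` is the `took` value from the response;
// `roundTripMs` is assumed to be measured by the caller or read from an APM span.
interface QueryTiming {
  tookMs: number;
  roundTripMs: number;
}

type Verdict = "slow-query" | "slow-outside-db" | "ok";

// Thresholds are illustrative, not tuned values.
function classify(
  t: QueryTiming,
  maxTookMs = 20,
  maxRoundTripMs = 60,
): Verdict {
  // The database itself reported a long execution time.
  if (t.tookMs > maxTookMs) return "slow-query";
  // The database was fast, so the time went to network, serialization, or our code.
  if (t.roundTripMs > maxRoundTripMs) return "slow-outside-db";
  return "ok";
}

// Time spent outside the database for a single query.
function overheadMs(t: QueryTiming): number {
  return t.roundTripMs - t.tookMs;
}
```

A query with `took` of 12ms and a 300ms round trip would be classified as `slow-outside-db`, which points at infrastructure or application code rather than the query.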

Ideas that didn't work

Not every optimization attempt yielded significant improvements. It's important to document these to avoid repeating unsuccessful approaches.

JS Runtime Replacements

We experimented with replacing our Node.js runtime with Bun, which yielded less than a 5% performance improvement and introduced some small incompatibilities with older NPM packages. Similarly, upgrading to Node.js v22 also resulted in less than 5% improvement. We skipped testing Deno based on the minimal gains from other runtime changes.

Web Server Replacements

We tried replacing Express with uWebSockets, but saw no meaningful improvement. This confirmed that Express wasn't the bottleneck in our system.

Other Minor Optimizations

Several smaller optimizations showed no or minimal improvement in response times:

- enabling Automatic Persisted Queries (APQ) surprisingly resulted in either the same or slightly worse performance

- various micro-optimizations (reducing promises, minimizing object/array creation, and streamlining loops) showed no noticeable improvement

- being more selective about which fields we retrieved from the database did reduce the amount of JSON being processed and reduced response times, but in most cases the gains weren't worth the added complexity. Our DB field names did not map 1:1 to GraphQL field names, and returning a single GraphQL field sometimes required fetching multiple seemingly unrelated DB fields, which made this challenging to maintain
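To illustrate why the field-selection mapping was hard to maintain, here is a minimal sketch of the kind of lookup table it requires. Every field name below is invented for illustration and `dbFieldsToSelect` is a hypothetical helper, not our actual code.

```typescript
// Hypothetical mapping: GraphQL field name -> DB fields needed to resolve it.
// Note that one GraphQL field can depend on several unrelated DB fields.
const dbFieldsFor: Record<string, string[]> = {
  id: ["doc_id"],
  displayName: ["first_name", "last_name"],
  status: ["state_code", "updated_at", "override_flag"],
};

// Collect the de-duplicated DB fields needed for a set of requested GraphQL fields.
function dbFieldsToSelect(requested: string[]): string[] {
  const needed = new Set<string>();
  for (const field of requested) {
    const deps = dbFieldsFor[field];
    if (deps === undefined) {
      // A missing entry means the table has drifted out of sync with the schema.
      throw new Error(`No DB mapping for GraphQL field "${field}"`);
    }
    deps.forEach((dep) => needed.add(dep));
  }
  return Array.from(needed).sort();
}
```

Every new GraphQL field or resolver change needs a matching update to a table like this, and a stale entry silently returns incomplete data, which is the maintenance cost described above.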

Rejected Ideas

Some promising ideas were turned down before implementing them.

Rewriting in Go

A team member prototyped a single GraphQL resolver in Go and achieved 40% faster response times with significantly lower CPU and memory usage than the Node.js version. However, we didn't have a team ready to start writing Go code, so this approach was shelved.

Replacing Apollo GraphQL with Mercurius GraphQL

While Mercurius offered potential performance benefits, it lacked features that were built into Apollo. The team was reluctant to rewrite the code that depended on these features, so we decided against this change.

Replacing GraphQL Shield

Turning off GraphQL Shield improved performance, but we could not ship this change. We considered replacing GraphQL Shield with a custom solution, but decided early in the optimization process that it wasn't worth the effort and would likely be too error-prone.

Long-lived Caches for Third-Party Information

A large part of the GraphQL API's purpose is fetching information gathered from third party sources which are heavily regulated. Organizations providing these information sources can take legal action if we present cached information that isn't up-to-date with their compliance rules. The team was concerned that invalidating caches on rule changes would make the system too complicated, so we left it alone.

Ideas that worked

Fortunately, several optimization approaches did yield significant improvements.

Swapping Apollo Server for Yoga

Replacing Apollo Server with Yoga resulted in a 15% (13ms) improvement in benchmarks and a 2-14% (7-40ms) gain in production. This change also gave us access to many useful plugins in the Yoga ecosystem.

Replacing Apollo Gateway with Apollo Router

Switching from Apollo Gateway to Apollo Router provided a 6% (5ms) improvement in benchmarks and a 9% (27ms) gain in production. This change also decreased memory usage, allowing us to reduce our server count and save on costs. There was less code to maintain, although writing plugins in the Rhai scripting language proved challenging. It's worth noting that some features are behind paywalls since this is a commercial product. We’re ok with this trade-off for now and will go without the paid features.

Increasing the # of vCPUs/threads

A remnant of the project's early experimental days was that the containers for the GraphQL servers only had 1 thread or vCPU. With only one vCPU, JavaScript execution competes with Node.js I/O, garbage collection, and container-level processing for the same CPU. Adding a second vCPU lets that work run in parallel with JavaScript execution. This change resulted in a huge 27% performance boost on one benchmarked query.

GraphQL Resolver Improvements

We made several improvements to our GraphQL resolvers:

- removing redundant database queries within resolvers yielded a 20% improvement in one query

- parallelizing database and other requests that were previously executed serially reduced wait times

- in some cases, extracting the GraphQL fields requested by our clients and using that to limit the fields selected by the DB yielded significant performance improvements. Reducing this overfetching reduced the amount of JSON being serialized, deserialized, and garbage collected, resulting in a 40% performance boost on one particularly overfetch-y query
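The serial-versus-parallel change from the list above can be sketched as follows. This assumes the two requests are independent of each other; `fetchProfile`, `fetchReports`, and `delay` are hypothetical stand-ins that simulate I/O with timers so the example is self-contained.

```typescript
// Simulate an I/O call that resolves with `value` after `ms` milliseconds.
function delay<T>(ms: number, value: T): Promise<T> {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

// Hypothetical data sources; neither needs the other's result.
const fetchProfile = (id: string) => delay(30, { id, name: "example" });
const fetchReports = (id: string) => delay(30, [`report-for-${id}`]);

// Serial: the second request starts only after the first finishes,
// so the total wait is roughly the sum of both.
async function resolveSerial(id: string) {
  const profile = await fetchProfile(id);
  const reports = await fetchReports(id);
  return { profile, reports };
}

// Parallel: both requests start immediately,
// so the total wait is roughly that of the slower one.
async function resolveParallel(id: string) {
  const [profile, reports] = await Promise.all([
    fetchProfile(id),
    fetchReports(id),
  ]);
  return { profile, reports };
}
```

Both functions return the same data. `Promise.all` rejects as soon as either request fails, so error handling may need a second look when converting serial code this way.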

Long-lived Caches for First-Party Data

While regulations prevented us from caching 3rd party data (as described above), we could cache reports we generated ourselves from 3rd party data. In some benchmarks, retrieving these reports from Redis by key was 10 times faster than querying ElasticSearch. We still need to benchmark this approach to see how it improves overall GraphQL query performance, and we need to determine if the cache hit rate will be high enough to justify the complexity and storage.
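One way to reason about whether the hit rate justifies the cache is a simple expected-latency model. This is a back-of-the-envelope sketch, not a measurement: it assumes a cache-aside read path where a miss pays for the cache lookup and then the database query, and the function names and example numbers are hypothetical.

```typescript
// Expected read latency for a cache-aside lookup.
// Assumption: a hit costs `cacheMs`; a miss costs `cacheMs + dbMs`.
function expectedLatencyMs(
  hitRate: number,
  cacheMs: number,
  dbMs: number,
): number {
  if (hitRate < 0 || hitRate > 1) {
    throw new RangeError("hitRate must be in [0, 1]");
  }
  return hitRate * cacheMs + (1 - hitRate) * (cacheMs + dbMs);
}

// Under this model the cache only lowers average latency
// when the hit rate exceeds cacheMs / dbMs.
function breakEvenHitRate(cacheMs: number, dbMs: number): number {
  return cacheMs / dbMs;
}
```

With a cache that is 10 times faster than the database (for example 2ms versus 20ms), `breakEvenHitRate(2, 20)` is `0.1`, and a 50% hit rate gives `expectedLatencyMs(0.5, 2, 20)` of 12ms. The model ignores storage cost and invalidation complexity, so the real threshold for adopting the cache is higher than the latency break-even alone.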

Key Learnings from this Experience

Our experiments taught us several valuable lessons:

Generalized Benchmarks can be Misleading

Benchmarks for runtimes like Bun and Deno, and for web servers like Fastify or uWebSockets, tout how little overhead the runtime or library adds to a request compared to alternatives. However, in our case, the overhead from web servers and runtime environments represents only a tiny fraction of the time our GraphQL API needs to fulfill a request. These benchmarks might be more relevant for APIs handling much higher request volumes or much simpler queries.

Real-World Workloads Are Essential for Accurate Benchmarking

We often test changes by repeatedly sending the same HTTP requests to our servers thousands of times because it is easy to set up, but this can lead to misleading results:

- it makes caching appear more effective than it actually is due to artificially high hit rates

- databases behave differently under real-world loads with a variety of queries rather than repeated queries

- a wider variety of requests & responses might trigger Node.js garbage collection differently
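The first point, about inflated cache hit rates, can be shown with a toy simulation. This is a sketch under simplifying assumptions: the cache is unbounded with no expiry, and both workloads are invented for illustration rather than taken from our traffic.

```typescript
// Fraction of requests served from an unbounded, never-expiring cache.
function hitRate(requestKeys: string[]): number {
  const seen = new Set<string>();
  let hits = 0;
  for (const key of requestKeys) {
    if (seen.has(key)) {
      hits++;
    } else {
      // First time we see this key: a miss that populates the cache.
      seen.add(key);
    }
  }
  return requestKeys.length === 0 ? 0 : hits / requestKeys.length;
}

// Synthetic load test: the same query sent 1,000 times.
const repeated = Array.from({ length: 1000 }, () => "query-A");

// Hypothetical mixed workload: 1,000 requests spread over 250 distinct queries.
const varied = Array.from({ length: 1000 }, (_, i) => `query-${i % 250}`);
```

Here `hitRate(repeated)` is `0.999` while `hitRate(varied)` is `0.75`. A real cache with size limits and expiry would likely do worse on the varied workload, so a repeated-request benchmark overstates the benefit.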

The more improvements we ship to production, the more we learn about weaknesses in our testing.

Layered Architecture Facilitates Experimentation

Testing different optimization strategies is easy due to our layered architecture:

- the web server is separate from the GraphQL server

- the GraphQL server is separate from the GraphQL resolvers

- the GraphQL resolvers are separate from the business logic

- the business logic is separate from the data manipulation layer

- the data manipulation layer is separate from the database query layer

This separation allows us to experiment with changes at different levels without disrupting the entire system.
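As a rough illustration of how this layering makes experiments cheap, the sketch below injects the query layer into the business logic, and the business logic into the resolvers. All names (`ReportQueryLayer`, `makeReportService`, `scoreLabel`, and so on) are hypothetical and much simpler than a real codebase.

```typescript
// Hypothetical database query layer boundary.
interface ReportQueryLayer {
  findReport(id: string): { id: string; raw_score: number } | undefined;
}

// Business logic depends only on the interface, not on a concrete database client.
function makeReportService(queries: ReportQueryLayer) {
  return {
    getScoreLabel(id: string): string {
      const report = queries.findReport(id);
      if (!report) return "unknown";
      return report.raw_score >= 50 ? "high" : "low";
    },
  };
}

// The resolver layer is a thin adapter over the business logic.
function makeResolvers(service: ReturnType<typeof makeReportService>) {
  return {
    Query: {
      scoreLabel: (_parent: unknown, args: { id: string }) =>
        service.getScoreLabel(args.id),
    },
  };
}

// Swapping the bottom layer for an experiment leaves the layers above untouched.
const inMemoryQueries: ReportQueryLayer = {
  findReport: (id) => (id === "r1" ? { id, raw_score: 72 } : undefined),
};
const resolvers = makeResolvers(makeReportService(inMemoryQueries));
```

Because each layer only sees the interface of the one below it, replacing a GraphQL server or a query implementation is a change at a single boundary rather than a rewrite.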

Looking Forward

Our experience optimizing this GraphQL API has shown that while some common optimization strategies might not yield the expected results, a methodical approach to testing and measuring performance can lead to substantial improvements. By focusing on the areas that truly impact performance and being willing to challenge assumptions, we've significantly improved our API's response times and resource utilization.