If I told you that you could provision the necessary infrastructure for your frontend application by running two commands, would you believe me? If you’ve read our previous blog, Setup Continuous Deployment of Your Nodejs App in a Highly Available Environment, you already know the answer is yes. If not, this tutorial takes a deep dive into how to describe the necessary infrastructure as code with Terraform, so that a frontend app hosted in AWS S3 is delivered via CloudFront under a custom domain and subdomain. We will then implement a CI/CD pipeline that lets you validate and push code to your environment reliably.

Requirements

- Access to an AWS account and a programmatic user.

- Terraform, downloaded and installed (I used v0.11.7).

- A registered domain and an AWS hosted zone.

- A GitHub account.

The Infrastructure

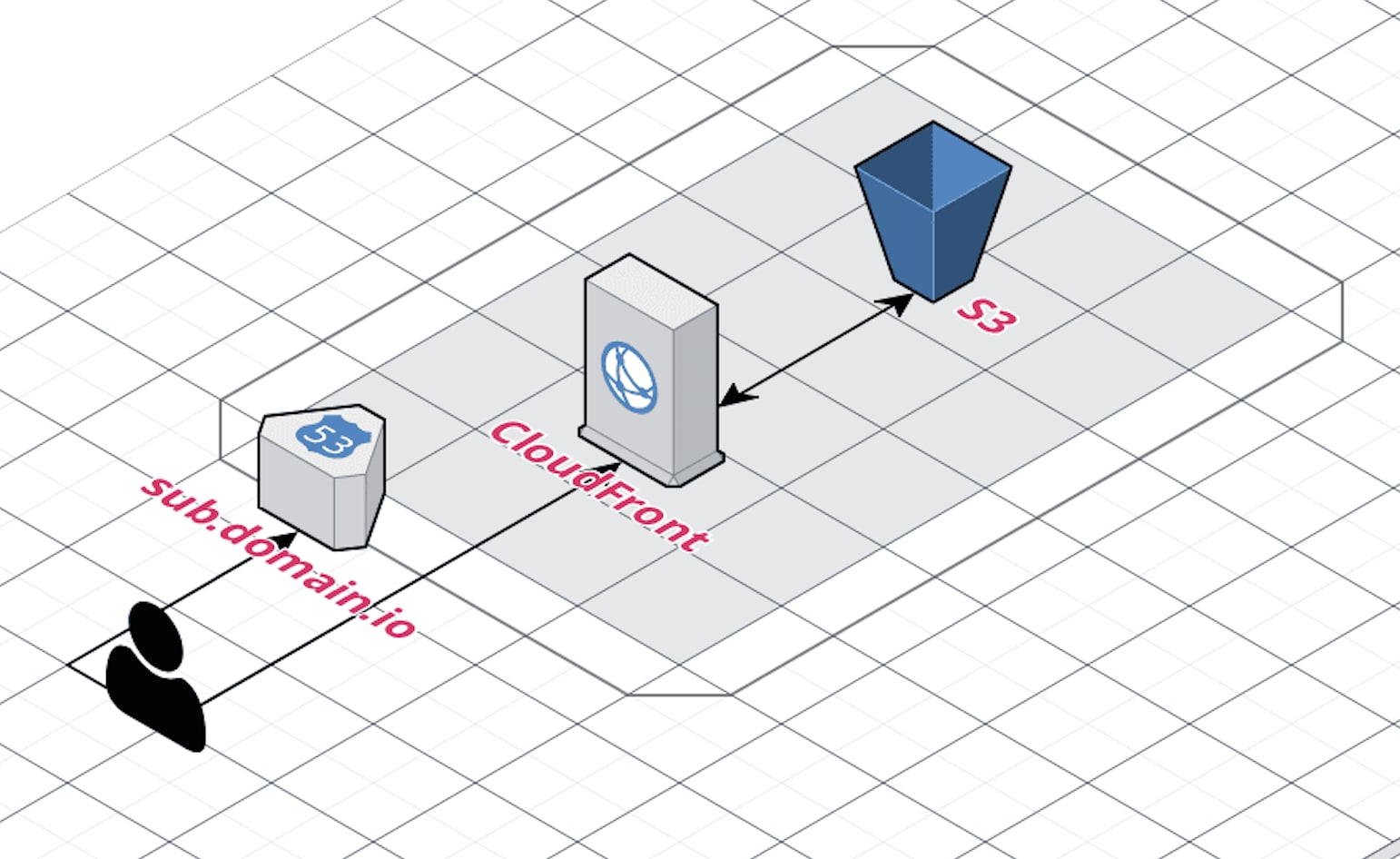

Let’s begin by plotting our solution. Use the following 3 AWS resources:

Your solution should look like this:

Now let’s take a look at our Terraform files (you can find them here). For the purpose of this tutorial, we’ll ignore the provider.tf and backend.tf files and focus on the relevant details of each AWS resource that we use.

About s3bucket.tf

Restricting access to S3 buckets is one of the many security best practices that we apply. We will only allow actions performed by our programmatic user. At the same time, we want the site to be served only via our domain name, rather than letting people fetch our files directly from the bucket (for example, s3://mybucketname/index.html). This means we need to restrict the ListBucket and GetObject actions so that they only respond to requests coming from our CloudFront origin access identity. Hence the following policy:

    {
      "Id": "bucket_policy_site",
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "bucket_policy_site_root",
          "Action": ["s3:ListBucket"],
          "Effect": "Allow",
          "Resource": "arn:aws:s3:::${var.s3_bucket_name}",
          "Principal": {"AWS":"${aws_cloudfront_origin_access_identity.origin_access_identity.iam_arn}"}
        },
        {
          "Sid": "bucket_policy_site_all",
          "Action": ["s3:GetObject"],
          "Effect": "Allow",
          "Resource": "arn:aws:s3:::${var.s3_bucket_name}/*",
          "Principal": {"AWS":"${aws_cloudfront_origin_access_identity.origin_access_identity.iam_arn}"}
        }
      ]
    }
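If you would rather not embed raw JSON, the same two statements can be sketched with Terraform’s `aws_iam_policy_document` data source and a standalone `aws_s3_bucket_policy` resource. This is a hedged alternative, not the layout of the tutorial’s s3bucket.tf: the label `site` on the data source and on the policy resource is an assumption, while `aws_s3_bucket.site`, `var.s3_bucket_name`, and the origin access identity are the names used in the files discussed here.

```hcl
# Alternative sketch: builds the same policy without hand-written JSON.
# The "site" labels below are assumed names, not taken from the project.
data "aws_iam_policy_document" "site" {
  policy_id = "bucket_policy_site"

  # Allow the CloudFront origin access identity to list the bucket.
  statement {
    sid       = "bucket_policy_site_root"
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::${var.s3_bucket_name}"]

    principals {
      type        = "AWS"
      identifiers = ["${aws_cloudfront_origin_access_identity.origin_access_identity.iam_arn}"]
    }
  }

  # Allow the same identity to read every object in the bucket.
  statement {
    sid       = "bucket_policy_site_all"
    actions   = ["s3:GetObject"]
    resources = ["arn:aws:s3:::${var.s3_bucket_name}/*"]

    principals {
      type        = "AWS"
      identifiers = ["${aws_cloudfront_origin_access_identity.origin_access_identity.iam_arn}"]
    }
  }
}

# Attach the generated JSON to the bucket.
resource "aws_s3_bucket_policy" "site" {
  bucket = "${aws_s3_bucket.site.id}"
  policy = "${data.aws_iam_policy_document.site.json}"
}
```

Statements in `aws_iam_policy_document` default to `Allow`, so the effect does not need to be spelled out. Use either this resource or a policy set directly on the bucket, not both, since the two would compete for the same bucket policy.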

About cloudfront.tf

In combination with our S3 policy, we need to create an origin access identity and attach it to our CloudFront distribution. This ensures that our S3 bucket will only respond to requests coming via CloudFront.

    # …
      origin {
        domain_name = "${aws_s3_bucket.site.bucket_domain_name}"
        origin_id   = "${var.s3_bucket_name}"

        s3_origin_config {
          origin_access_identity = "${aws_cloudfront_origin_access_identity.origin_access_identity.cloudfront_access_identity_path}"
        }
      }
    # …
    }

    resource "aws_cloudfront_origin_access_identity" "origin_access_identity" {
      comment = "Origin Access Identity for S3"
    }

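Because we are dealing with a single-page Angular application hosted in S3, we delegate all routing to the app. Whenever a request for an app route produces a 403 or 404 (there is no matching object in the bucket), CloudFront serves /index.html with a 200 instead and the Angular router takes over.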

    # …
      custom_error_response {
        error_code         = 403
        response_code      = 200
        response_page_path = "/index.html"
      }

      custom_error_response {
        error_code         = 404
        response_code      = 200
        response_page_path = "/index.html"
      }
    # …

About route53.tf

Configuring route53.tf is actually pretty simple. Create a DNS record that points at our CloudFront distribution and set the alias zone_id to Z2FDTNDATAQYW2, the fixed hosted zone ID that Amazon’s documentation specifies for CloudFront alias targets.

    # …
      zone_id = "${data.aws_route53_zone.primary.zone_id}"
      name    = "${var.subdomain}.${var.domain}"
      type    = "A"

      alias {
        name                   = "${aws_cloudfront_distribution.site.domain_name}"
        zone_id                = "Z2FDTNDATAQYW2" # CloudFront ZoneID
        evaluate_target_health = false
      }
    }
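The record’s own `zone_id` comes from a `data.aws_route53_zone.primary` lookup that isn’t shown in the excerpt. A minimal sketch of such a lookup, assuming it is keyed on the `hosted_zone` variable from terraform.tfvars (the project’s actual definition may differ):

```hcl
# Assumed definition: finds an existing public hosted zone by name
# so the record can reference its zone_id.
data "aws_route53_zone" "primary" {
  name         = "${var.hosted_zone}."
  private_zone = false
}
```

Because this is a data source rather than a resource, Terraform only reads the zone; it does not create or manage it, which matches the requirement of having a hosted zone in place beforehand.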

Now that we have an idea of what’s going on in these files, let’s configure the project and create our infrastructure. In the root of the project, create a file called terraform.tfvars with the following content (the project includes a template, terraform.tfvars.template) and replace the values with your own. Do not commit this file to your repository: it contains your AWS access key and secret.

    project_key    = "terraform-frontend-tutorial"
    aws_access_key = "XXXXXXXXXXXXXXXXXXX"
    aws_secret_key = "XXXXXXXXXXXXXXXXXXX"
    aws_region     = "ca-central-1"
    s3_bucket_name = "tutorial.yourdomain.io"
    s3_bucket_env  = "development"
    domain         = "yourdomain.io"
    subdomain      = "tutorial"
    hosted_zone    = "yourdomain.io"
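For these values to be usable as `var.<name>` in the .tf files, each key needs a matching variable declaration somewhere in the project. As a rough sketch (the file name variables.tf and the descriptions are assumptions; the repository may organize this differently), the declarations could look like this:

```hcl
# variables.tf (hypothetical file name; descriptions are illustrative)
variable "project_key" {
  description = "Project identifier"
}

variable "aws_access_key" {
  description = "Access key of the programmatic user"
}

variable "aws_secret_key" {
  description = "Secret key of the programmatic user"
}

variable "aws_region" {
  description = "AWS region, e.g. ca-central-1"
}

variable "s3_bucket_name" {
  description = "Name of the bucket that stores the built site"
}

variable "s3_bucket_env" {
  description = "Environment label, e.g. development"
}

variable "domain" {
  description = "Registered domain, e.g. yourdomain.io"
}

variable "subdomain" {
  description = "Subdomain the site is served from, e.g. tutorial"
}

variable "hosted_zone" {
  description = "Name of the existing Route 53 hosted zone"
}
```

None of the declarations sets a default, so Terraform will prompt for any value that is missing from terraform.tfvars rather than silently falling back to something unintended.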

We can now execute our Terraform project by running:

    $ terraform init
    $ terraform apply

Note: It is a best practice to run terraform plan before terraform apply to see the details of what will be performed.

The CloudFront distribution usually takes about 15 minutes to deploy, so a delay is normal. Other than that, we are done spinning up our entire front-end infrastructure. From here on, any changes to it should be applied via the Terraform project and ideally go through a code review process. Now let’s upload our app to S3.

Setting up the CI/CD pipeline with CircleCI

If you’re not familiar with CircleCI, I recommend taking a look at their overview page and then coming back here to continue with the rest of the setup (linking your GitHub account with CircleCI is as easy as logging in to CircleCI with your GitHub credentials). We will be using the Angular Tour of Heroes tutorial app and setting up a CI/CD pipeline for it. Get started by downloading the sample app and pushing it to your GitHub repository.

To configure our pipeline we just have to create a .circleci/config.yml in the root of our project. I’ve done that for you already so let’s take a look:

About .circleci/config.yml

    defaults: &defaults
      working_directory: ~/repo

    version: 2
    jobs:
      build:
        <<: *defaults
        docker:
          # specify the version you desire here
          - image: circleci/node:10-browsers
        steps:
          - checkout
          # Download and cache dependencies
          - restore_cache:
              keys:
                - v1-dependencies-{{ checksum "package.json" }}
                # fallback to using the latest cache if no exact match is found
                - v1-dependencies-
          - run: npm ci
          - save_cache:
              paths:
                - node_modules
              key: v1-dependencies-{{ checksum "package.json" }}
          # run tests!
          - run: npm run lint
          - run: npm run e2e
          - run: npm run build
          # Persist the build output (the contents of dist) to the next job
          - persist_to_workspace:
              root: dist
              paths:
                - "**"
      deploy:
        <<: *defaults
        docker:
          # specify the version you desire here
          - image: joseguillen/awscli-container
        steps:
          - attach_workspace:
              at: ~/repo
          - run:
              name: Configure AWS CLI
              command: aws configure set default.region ${AWS_DEFAULT_REGION}
          - run:
              name: Deploy entire directory
              command: aws s3 cp . s3://${BUCKET_NAME} --recursive
    # ...

As you may have noticed, the pipeline is split into two stages: the build job and the deploy job. The build job essentially checks out our app, downloads its dependencies, runs all its tests, and builds the app.

Note: To ensure that we can run end-to-end tests in CircleCI, the Docker image used for the job must have a browser installed. Fortunately, CircleCI’s library of Docker images provides us with many options. In our case, we used circleci/node:10-browsers.

The deploy job is in charge of uploading the built app to our S3 bucket. I’ve gone ahead and created a custom Docker image that already contains the AWS CLI in it. You can add your AWS credentials to the CircleCI project settings, and while you’re there you can configure a couple of environment variables in the Environment Variables section:

    AWS_DEFAULT_REGION = ca-central-1
    BUCKET_NAME = tutorial.yourdomain.io

Now every time you commit something to your master branch, it will trigger a build and a deployment. All other branches will only trigger a build (take a look at the workflows section of the config.yml file).

Conclusion

Now that you’ve completed the tutorial, you should have a) all your infrastructure as code, where every change can be easily reviewed, tested, and documented, and b) a CI/CD pipeline with end-to-end testing for a single-page application that complies with DevOps principles. Take this, adapt it to your own projects, and streamline your development process. Lean on pull request conditions and the code review process to fortify your codebase. If you want to take this to the next level, consider implementing AWS Certificate Manager to enable SSL on your site, and migrate your Terraform state file to a shared and secure location like S3 to enable collaboration.
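As a starting point for that last suggestion, an S3 backend can be sketched as follows. The bucket name and key are placeholders rather than values from this project, and the bucket has to exist before Terraform can use it. Backend blocks do not accept variable interpolation, so the values are written literally.

```hcl
# Sketch of a remote state configuration; bucket and key are hypothetical.
terraform {
  backend "s3" {
    bucket  = "your-terraform-state-bucket"                   # placeholder bucket name
    key     = "terraform-frontend-tutorial/terraform.tfstate" # placeholder state path
    region  = "ca-central-1"
    encrypt = true                                            # encrypt the state at rest
  }
}
```

After adding or changing a backend, run `terraform init` again so that Terraform can offer to copy the existing local state to the new location.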