Let's say you have a moderately complex website coming together.

This website has a few different stakeholders, so there are multiple integrations, each requiring its own set of technologies.

The first, and arguably most important, feature is your overall Web Vitals score. Not only do these metrics indicate how fast your website loads, they'll also affect your Google search ranking in the coming year.

With this first feature in mind, you’ve been careful to follow best practices around achieving performance on the modern web. The specific performance requirements will change based on your website and its architecture, but a baseline checklist for this might include:

- Serve encoded assets with gzip or brotli

- Use preload links for critical styles, scripts and fonts

- Apply a font-display strategy on any of your @font-face declarations (using woff2)

- Preconnect to any third-party origins to reduce the cost of round trips (RTTs)

- Optimize and resize images, and serve them in next-gen formats (e.g. WebP, JPEG 2000, JPEG XR)

- Chunk & lazy-load your codebase by route or component (assuming this is an SPA)

- Serve over HTTP/2 for concurrency & header compression

- Serve assets over a CDN

Despite all your best intentions, you find that your website is slower than you'd expect. Look at all the work you've already done! Still, the form on your landing page doesn't become interactive until a couple of seconds later than you'd like, and your menu can't be opened early enough, either.

This could have a number of causes, but it's likely that your main thread is simply too busy with unscheduled Long Tasks. With third-party integrations to implement, we can't always control all the code our website runs, and those integrations pile up: a tag manager, a chat widget, or perhaps simply an expensive API call.
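To confirm that Long Tasks are what's hurting you, Chromium-based browsers expose them through the Long Tasks API as `longtask` performance entries; a long task is any task that keeps the main thread busy for 50 ms or longer. The sketch below assumes two hypothetical helpers, `observeLongTasks` and `blockingTime`. The latter is a simplified version of the idea behind Lighthouse's Total Blocking Time: it sums the portion of each task beyond 50 ms, but ignores the FCP-to-TTI window that the real metric is limited to.

```javascript
// Tasks at or beyond this duration count as "long tasks".
const LONG_TASK_THRESHOLD_MS = 50;

// Hypothetical helper: sum the blocking portion (time beyond 50 ms) of each task.
// Simplified: Lighthouse's TBT only counts tasks between FCP and TTI.
function blockingTime(durationsMs) {
  return durationsMs.reduce(
    (total, duration) => total + Math.max(0, duration - LONG_TASK_THRESHOLD_MS),
    0,
  );
}

// Hypothetical helper: report long tasks as they happen.
// Returns null where the browser doesn't support `longtask` entries.
function observeLongTasks(onTask) {
  const supported =
    typeof PerformanceObserver !== 'undefined' &&
    (PerformanceObserver.supportedEntryTypes || []).includes('longtask');
  if (!supported) return null;

  const observer = new PerformanceObserver((list) => {
    for (const entry of list.getEntries()) onTask(entry);
  });
  // `buffered: true` also asks for long tasks recorded before the observer existed.
  observer.observe({ type: 'longtask', buffered: true });
  return observer;
}
```

Calling `observeLongTasks((entry) => console.log('Long task:', Math.round(entry.duration), 'ms'))` early in the page, then passing the collected durations to `blockingTime`, gives a rough sense of how long the main thread spends blocked during load.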

Whatever they are, these dependencies often pull in further dependencies of their own, which in turn cause prolonged script evaluation (usually followed by layout and paint). This overworks the main thread and blocks interactions as a result.

The issue here is priority, so you could try a native solution like the importance attribute from Priority Hints (since shipped in Chromium as fetchpriority). The tradeoff is that you're deferring to the browser on exactly when resources are loaded; plus, this feature is still very much experimental. There are cases where greater control is needed, and cross-browser support has to be accounted for more broadly.

Enter: late tasks

Using tools from the Background Tasks API, we can schedule our low-priority scripts containing uncontrolled or expensive work as “late tasks” in order to protect the Critical Rendering Path and keep our Web Vitals in check.

What we're doing is this: we prioritize important tasks (like building the DOM and CSSOM, loading fonts, etc.) and defer less important work, like loading code for content farther down the page, or tracking tools and widgets that don't need to load up front.

One thing to note before getting started: check whether any of your third-party scripts depend on the window.onload event. If they do, you'll need to modify them to use another event or trigger. As late tasks, these scripts may well run after window.onload has already fired, so a load listener they register would never be called.
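Combining the two ideas, a late task amounts to "wait for load, then wait for idle". The following is a minimal sketch rather than the article's implementation: `scheduleLateTask`, its `win` and `timeout` options, and the 2000 ms default are all assumptions, and the window object is injectable so the logic can run outside a browser. Checking `document.readyState` first is what avoids the onload trap described above, because a load listener added after the event has fired is simply never called.

```javascript
// Hypothetical helper: run `task` as a "late task", i.e. after the window's
// load event, and then only once the browser reports an idle period.
function scheduleLateTask(task, { win = globalThis.window, timeout = 2000 } = {}) {
  if (!win) return; // not running in a browser

  const runWhenIdle = () => {
    if (typeof win.requestIdleCallback === 'function') {
      // `timeout` guarantees the task eventually runs even if the page never goes idle.
      win.requestIdleCallback(task, { timeout });
    } else {
      // Crude fallback for browsers without requestIdleCallback
      // (the task receives no IdleDeadline in this case).
      win.setTimeout(task, 1);
    }
  };

  if (win.document.readyState === 'complete') {
    // `load` has already fired; a listener added now would never be called.
    runWhenIdle();
  } else {
    win.addEventListener('load', runWhenIdle, { once: true });
  }
}
```

For example, `scheduleLateTask(() => loadScript('/count-appender.js'))` would hold the expensive script back until after load and the next idle period.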

Our example problem

We have a resource-intensive task that blocks what's currently on screen, even though its own output isn't immediately visible.

I’ve made a naive example that appends 2000 elements to the DOM after a brief calculation on each cycle, not unlike what might happen on a larger page after initializing a framework, requesting data from an API and then rendering the results to the page.

```js
// count-appender.js

// Append 2000 paragraphs, doing a small calculation for each one.
function countAppender() {
  let index = 1;
  const target = 2000;

  while (index <= target) {
    const random = ((Math.random() * 100 * index) / index) * window.innerWidth;
    append(random);
    index++;
  }
}

// Append `content` as a new paragraph inside #appendZone.
function append(content) {
  const element = document.createElement('p');
  element.innerText = content;

  // Naive by design: the target is looked up on every call.
  const target = document.getElementById('appendZone');
  target.appendChild(element);
}

countAppender();
```

```html
<!-- index.html -->
<html lang="en">
  <head>
    < … />
  </head>
  <body>
    <div id="root"></div>
    <div id="appendZone"></div>
    <script src="%PUBLIC_URL%/count-appender.js" async defer></script>
  </body>
</html>
```

```jsx
// App.jsx
import { useState } from 'react';

function App() {
  // Start with an empty string so the input is controlled from the first render.
  const [query, setQuery] = useState('');

  const onChange = (event) => setQuery(event.target.value);

  const submit = (event) => {
    event.preventDefault();
    console.log(query);
  };

  return (
    <div className="above-the-fold">
      <form onSubmit={submit}>
        <h2>Unscheduled Searcher</h2>
        <input
          value={query}
          onChange={onChange}
          className="App-input"
          placeholder="Enter your search" />
        <button type="submit">Search</button>
      </form>
    </div>
  );
}

export default App;
```

We'll be using Google PageSpeed Insights for our initial auditing. I'm testing against a deployed instance because it gives more realistic latency conditions. Keep in mind that these tests can have high variance, so don't rely on a single run.

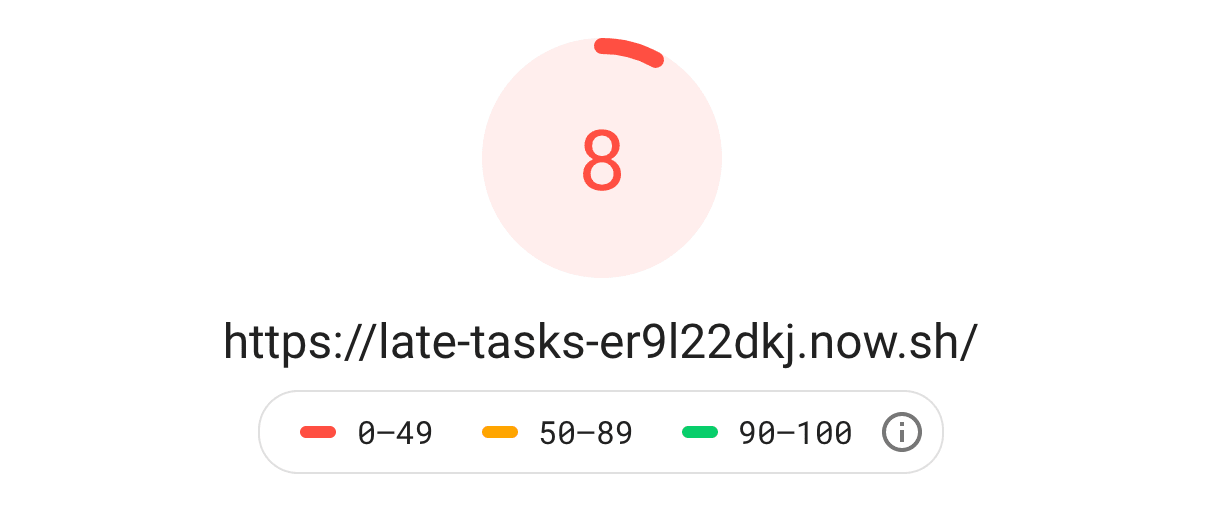

Unscheduled score (baseline)

This baseline comes from the mobile audit, which is the harder one to score well on because it simulates a mid-range device on a throttled network connection. This is a good thing. We should always audit with real-world conditions in mind: it gives us insight into likely real-world performance, and makes it easier to see where our longest-running code is.
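If you want to confirm which code is the longest-running rather than infer it, the User Timing API lets you bracket a suspect block with marks so it appears as a named measure in the Chrome DevTools Performance panel. A minimal sketch; `timeWork` and its `label:start`/`label:end` naming scheme are assumptions.

```javascript
// Hypothetical helper: time a synchronous block of work with User Timing
// marks, and return both its result and how long it took in milliseconds.
function timeWork(label, work) {
  performance.mark(`${label}:start`);
  const result = work();
  performance.mark(`${label}:end`);

  // The measure is also recorded on the performance timeline under `label`.
  const measure = performance.measure(label, `${label}:start`, `${label}:end`);
  return { result, duration: measure.duration };
}
```

For instance, `timeWork('count-appender', countAppender)` would report how long the 2000 appends take on the current device.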

In this case, we already know our long-running task is the 2000 nodes being added below the fold. We'll employ two strategies to attempt to address this.

Strategy #1: requestIdleCallback & script injection

This approach uses the requestIdleCallback API to tell the browser to run a given task only once it is idle. Effectively, this gives any interaction that happens during the load a chance to be handled before the additional work of loading the rest of the page begins.
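requestIdleCallback also hands its callback an IdleDeadline, whose timeRemaining() method reports how much of the current idle period is left. A finer-grained variation (not the approach measured in this article) is to split work like the 2000 appends into chunks that each stop when the idle period runs out. A minimal sketch: `processInIdleChunks` and the 2000 ms `timeout` are assumptions, and the scheduler is injectable so the logic can be exercised outside a browser.

```javascript
// Hypothetical helper: run `work(item)` for each item, but only during idle
// periods, yielding back to the browser whenever the current period runs out.
// Resolves with the number of items processed.
function processInIdleChunks(
  items,
  work,
  schedule = (callback, options) => globalThis.requestIdleCallback(callback, options),
) {
  return new Promise((resolve) => {
    if (items.length === 0) {
      resolve(0);
      return;
    }

    let index = 0;
    const runChunk = (deadline) => {
      // Always make progress on at least one item (the callback may have been
      // forced by `timeout`), then continue while idle time remains.
      do {
        work(items[index]);
        index++;
      } while (index < items.length && deadline.timeRemaining() > 0);

      if (index < items.length) {
        schedule(runChunk, { timeout: 2000 }); // yield, resume in the next idle period
      } else {
        resolve(index);
      }
    };

    schedule(runChunk, { timeout: 2000 });
  });
}
```

In the count-appender example, `work` could be the `append` function and `items` the precomputed values; where requestIdleCallback is missing, you would pass a shimmed scheduler instead.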

```js
// load-script.js

// Inject a <script> tag and resolve with it once it has loaded (reject on error).
export const loadScript = (src) =>
  new Promise((resolve, reject) => {
    const script = document.createElement('script');
    script.addEventListener('load', () => resolve(script));
    script.addEventListener('error', () => reject(new Error(`Failed to load script: "${src}"`)));
    // Injected scripts never block parsing; `defer` is ignored for them, so only `async` is set.
    script.async = true;
    script.src = src;
    document.head.appendChild(script);
  });
```

```jsx
// App.jsx
import { Fragment, useEffect, useState } from 'react';
import { loadScript } from './load-script';

function App() {
  const [query, setQuery] = useState('');

  const onChange = (event) => setQuery(event.target.value);

  const submit = (event) => {
    event.preventDefault();
    console.log(query);
  };

  // After the first render, wait until the browser is idle before injecting
  // the expensive script.
  useEffect(() => {
    const handle = window.requestIdleCallback(() => loadScript('/count-appender.js'));
    // Cancel the pending callback if the component unmounts before it runs.
    return () => window.cancelIdleCallback(handle);
  }, []);

  return (
    <Fragment>
      <div className="above-the-fold">
        <form onSubmit={submit}>
          <h2>Idle Callback Searcher</h2>
          <input
            value={query}
            onChange={onChange}
            className="App-input"
            placeholder="Enter your search" />
          <button type="submit">Search</button>
        </form>
      </div>

      <div id="appendZone"></div> {/* <- no longer in index.html */}
    </Fragment>
  );
}

export default App;
```

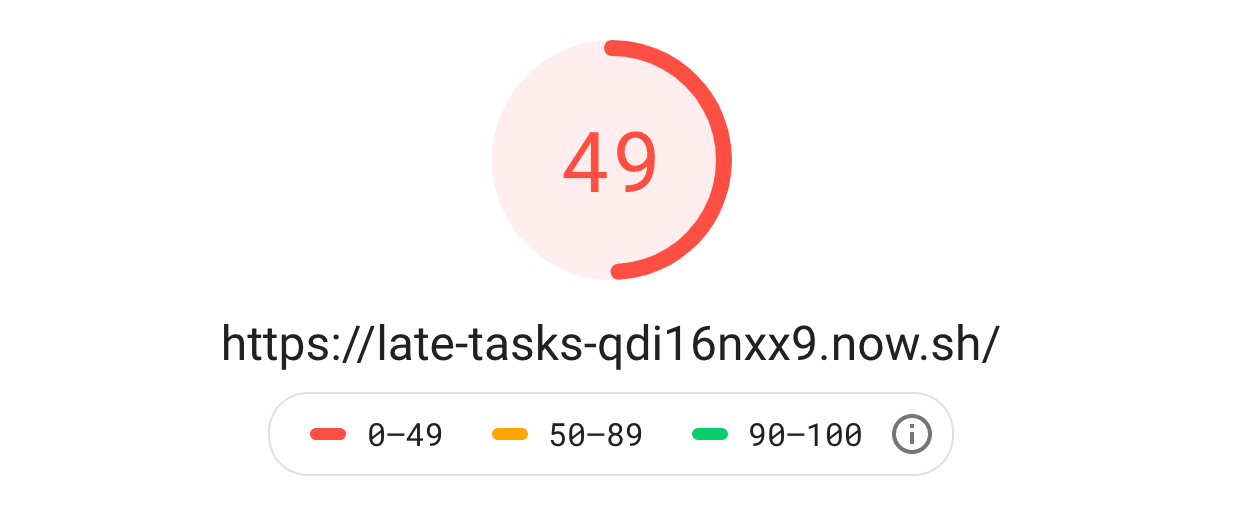

Scheduled with requestIdleCallback

The result is much better than before, but not as great as we might have hoped for. While the improvement is dramatic, we're still paying the price of the 2000 nodes, just later, as a late task. PageSpeed takes late tasks into account when calculating the score because even late tasks occupy the main thread and can block the user while they run.

"Perfect performance does not mean perfect UX, and not every change that improves the UX will improve performance." (Patrick Hulce)

We've improved the UX by prioritizing interaction during load, but we've also delayed adding the nodes to the page, which slightly increases the overall load time. It's a tradeoff, and the metrics in these reports don't capture the nuance of the solution. These performance tools aren't strictly measuring experience; they're measuring performance with an opinion on experience.

Note that this API is still experimental and not supported in every browser, so you should never use it without a shim, and use it carefully when you do. A shim falls back to setTimeout or a similar mechanism, which forces a schedule that can conflict with user interaction in some situations. Still, as a progressive enhancement it's better than nothing, and it lets us offer a better experience to users with modern enough browsers.
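A minimal shim along those lines might look like this. It is a sketch, not a particular library's implementation: `requestIdleCallbackShim` and `cancelIdleCallbackShim` are hypothetical names, the fallback ignores the `timeout` option, and its deadline is faked with a fixed 50 ms budget (the longest idle period the spec allows), so it cannot actually tell whether the browser is busy.

```javascript
// Use the native API where it exists; otherwise fall back to setTimeout.
const requestIdleCallbackShim =
  globalThis.requestIdleCallback?.bind(globalThis) ??
  function requestIdleCallbackFallback(callback) {
    const start = Date.now();
    return setTimeout(() => {
      callback({
        didTimeout: false,
        // Fake deadline: up to 50 ms from when the callback was requested.
        // Unlike the native API, this says nothing about how busy the browser is.
        timeRemaining: () => Math.max(0, 50 - (Date.now() - start)),
      });
    }, 1);
  };

// Matching cancel function: native where available, clearTimeout otherwise.
const cancelIdleCallbackShim =
  globalThis.cancelIdleCallback?.bind(globalThis) ?? ((id) => clearTimeout(id));
```

In the idle-callback version of App.jsx, window.requestIdleCallback and window.cancelIdleCallback could be swapped for these two functions.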

Strategy #2: Intersection Observer & script injection

While not precisely a late task, this is the most extreme way to eliminate the cost of our long task. The Intersection Observer API lets us detect when an element has entered, or is about to enter, the viewport. That gives us the control to delay loading the script until the content is about to come into view.

The use cases for this approach are narrower than for the previous one, because elements jumping onto the screen in high-latency conditions can hurt the user experience. That jump is layout shift (measured by Cumulative Layout Shift, one of the Core Web Vitals), which should be avoided at all costs.

A common use case is loading images farther down the page, although for that you should probably use the browser's native lazy loading (loading="lazy") now.

A less common application might be on a chat widget. For instance: perhaps we don’t need the widget loaded up front. Instead, we could set an element just off-screen that triggers the chat widget to be fetched and added to the DOM when scrolling occurs. This makes the chat widget “appear” after interaction, and allows the initial load to be unburdened with the widget’s dependencies and script evaluation.
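The chat-widget idea boils down to "observe a sentinel element, load once, disconnect". Here is a minimal vanilla sketch, assuming a hypothetical `loadWhenNearViewport` helper. The `200px 0px` rootMargin is an arbitrary choice that starts the load a little before the sentinel becomes visible, trading some of the savings for less visible pop-in, and the observer constructor is injectable so the logic can be exercised without a browser.

```javascript
// Hypothetical helper: call `load` once, the first time `target` comes within
// `rootMargin` of the viewport, then stop observing. Returns a cancel function.
function loadWhenNearViewport(
  target,
  load,
  { rootMargin = '200px 0px', Observer = globalThis.IntersectionObserver } = {},
) {
  if (typeof Observer !== 'function') {
    // No IntersectionObserver support: load right away rather than never.
    load();
    return () => {};
  }

  const observer = new Observer(
    (entries) => {
      if (entries.some((entry) => entry.isIntersecting)) {
        observer.disconnect(); // only ever load once
        load();
      }
    },
    { rootMargin, threshold: 0 },
  );
  observer.observe(target);

  // Lets callers stop watching (e.g. on unmount) before the load happens.
  return () => observer.disconnect();
}
```

Usage might look like `loadWhenNearViewport(document.getElementById('chat-sentinel'), () => loadScript('/chat-widget.js'))`, where the element id and script path are placeholders.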

We're going to use a package called react-intersection-observer to manage our Intersection Observer instance. You could write this yourself, but you'd then need to manage your observer references manually, which adds more complexity than we should cover here.

```jsx
// App.jsx
import { Fragment, useEffect, useState } from 'react';
import { useInView } from 'react-intersection-observer';
import { loadScript } from './load-script';

function App() {
  const [query, setQuery] = useState('');

  const onChange = (event) => setQuery(event.target.value);

  const submit = (event) => {
    event.preventDefault();
    console.log(query);
  };

  // `ref` goes on the element to watch; `inView` becomes true the first time
  // it enters the viewport and, with triggerOnce, stays true afterwards.
  const [ref, inView] = useInView({
    threshold: 0,
    triggerOnce: true,
  });

  // Inject the expensive script only once its target is in view.
  useEffect(() => {
    if (inView) {
      loadScript('/count-appender.js');
    }
  }, [inView]);

  return (
    <Fragment>
      <div className="above-the-fold">
        <form onSubmit={submit}>
          <h2>Observer Searcher</h2>
          <input
            value={query}
            onChange={onChange}
            placeholder="Enter your search" />
          <button type="submit">Search</button>
        </form>
      </div>

      <div id="appendZone" ref={ref}></div> {/* <- our target via ref */}
    </Fragment>
  );
}

export default App;
```

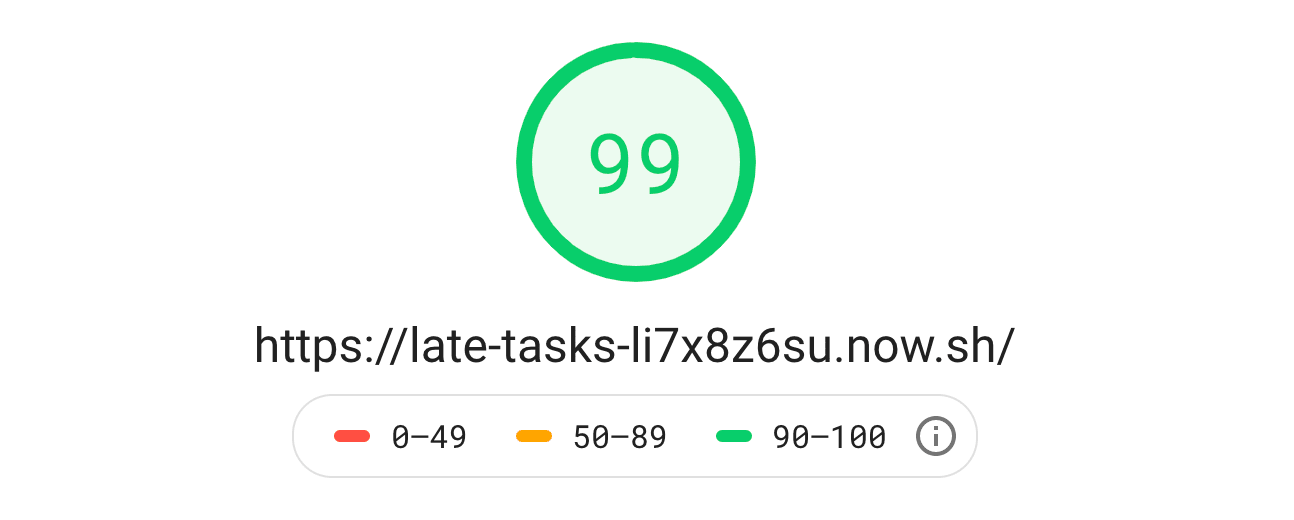

Scheduled with Intersection Observer

This is the sort of score we’re looking for!

In this specific example, the tradeoff is that the user might not be able to scroll immediately, as the content is fetched and rendered only once they attempt to scroll. This is a pretty severe user experience penalty, especially on slow network connections and devices with limited CPU.

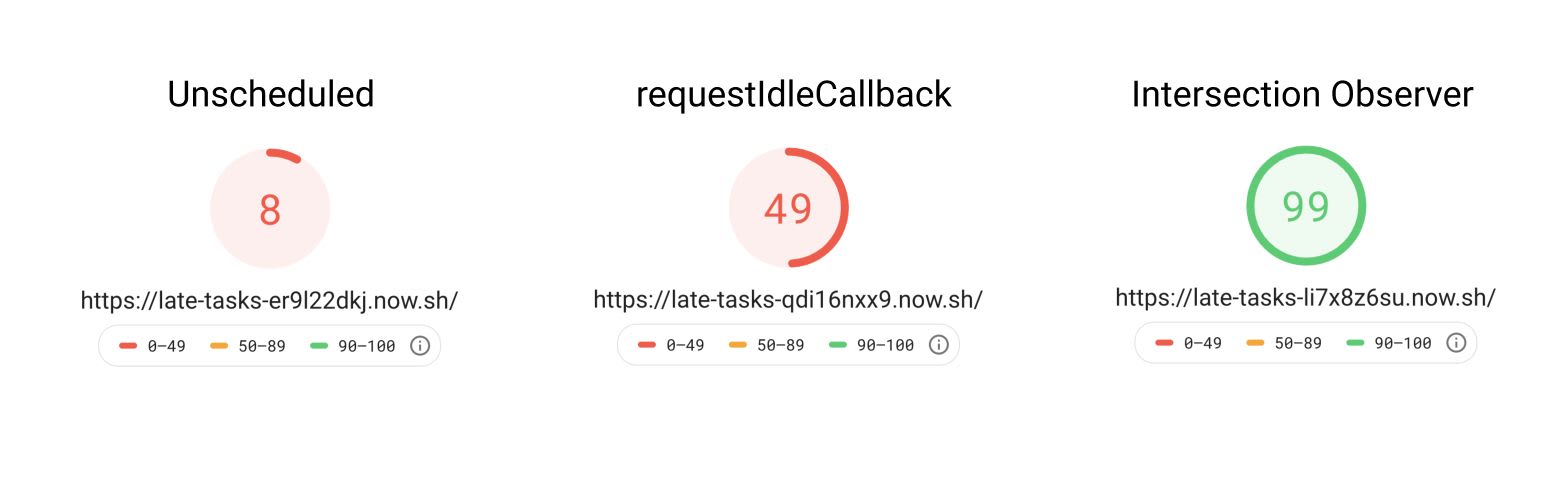

Let's review

Here are the overall outcomes of the three scenarios:

Google PageSpeed Insights metrics

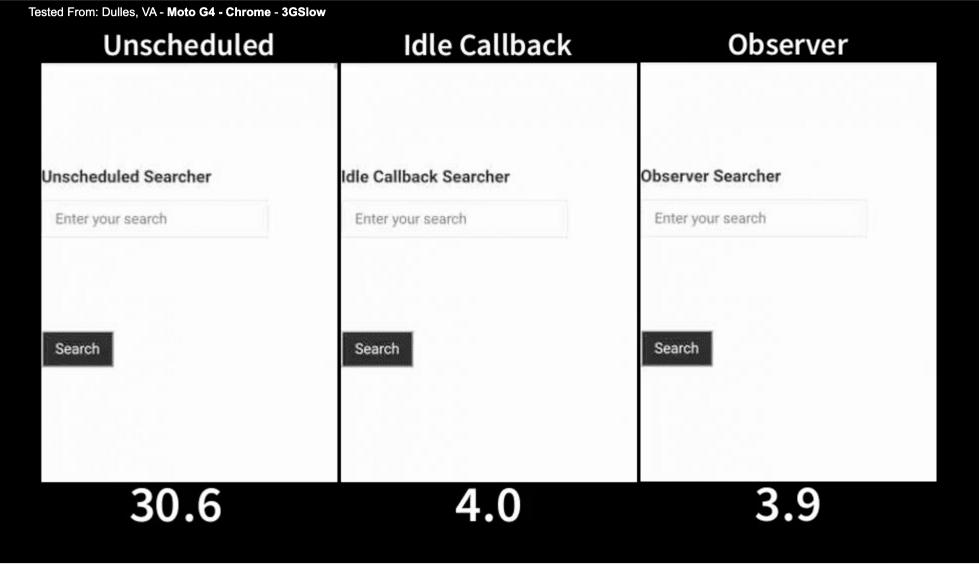

It's great to see the metrics here, but they don't tell us much about the experience of the different approaches. For another perspective, let's compare them with the metrics WebPageTest gives us on a Moto G4 over a 3G Slow network connection:

WebPageTest metrics on Moto G4 over a 3G Slow network

Interesting: both approaches resulted in a load time of ~4 seconds, while their PageSpeed Insights scores differ by 50 points!

This difference can be attributed to the evaluation time taken up by the idle callback and the work it triggers once the CPU goes idle. The Intersection Observer approach defers that cost until after the page load, when an interaction scrolls the target element into view.

The lesson here is that we can't focus only on raw performance metrics. While the Intersection Observer approach has the better score, it might also block the user from scrolling, because it doesn't do the work of rendering the page ahead of their interaction.

I would go with Strategy #1 in this case.

It's a matter of balance: We must always weigh user experience and performance.

Takeaways

We've been on a JavaScript binge since the advent of Single Page Applications, which has been fuelled by more and more powerful devices to run our code. What's commonly lost in this feature-focused fervour is that not every device has the latest specs; not every network has the fastest connection.

Metrics such as Web Vitals are a great first step toward ensuring the best experience for everyone. It's our responsibility to be mindful of this and use our tools in service of that goal.