In part 1, we learned about Docker and created a Dockerfile that provides a suitable environment in which our application's services can run. If you recall, our sample application has two services: a Node backend API and a React front-end. In part 2, we will use Docker Compose to orchestrate our application's services during development so that we can run our distributed application with a single command.

Orchestrating Services With Docker Compose

To orchestrate the services our application needs with Docker Compose, we define each service in a docker-compose.yml file. Let's start with our Node backend API: in the root of our cloned repo, create a file named docker-compose.yml containing the following:

```yaml
version: "3"

services:
  # backend API on port 3000
  api:
    build: .
    ports:
      - "3000:3000"
    volumes:
      - ./api:/app
    working_dir: /app
    command: npm start
```

This instructs Compose to create a service called api (api:) using the Dockerfile in the current directory (build: .), map port 3000 inside the container to port 3000 on the host, mount the host's ./api directory into the container at /app, make /app the working directory, and, when the container starts, run npm start to launch our service.

With our docker-compose.yml defined, working with Compose is similar to working with a single Dockerfile, except that we use docker-compose instead of docker. First, we build our image:

```
$ docker-compose build
Building api
...
Successfully built $HASH
```

Installing Dependencies for Our Service

Since the environment within our container is most likely different from our host machine's, and some dependencies install differently on different operating systems (for example, packages that compile native code), we need to install dependencies from within the container. To do this, we use run:

```
$ docker-compose run api npm install
...
```

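This starts a one-off container for the api service defined in our docker-compose.yml and executes npm install inside it, ensuring that the dependencies are installed against Ubuntu (and not our host's operating system). Because ./api is mounted at /app, the resulting node_modules directory is written to ./api on our host and is available the next time the service starts.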

Starting Our Application With docker-compose up

Once we add our front-end service, starting services one at a time with run will no longer be practical, so instead we use up, which starts every service defined in our docker-compose.yml. Even though we only have one service so far, our back-end API, let's start our application anyway:

```
$ docker-compose up
Creating network ...
Creating docker_api_1
Attaching to docker_api_1
...
api_1 | Example API listening on port 3000
```

With the log output streaming to our shell, navigate to http://localhost:3000/colors in a browser, and you will see the request logged:

```
api_1 | GET 200 /colors
```

Because we mounted ./api into our container, we can edit ./api/src/index.js on our host, and nodemon, which npm start uses to run our server, detects the changes and restarts our Node server automatically:

```
api_1 | [nodemon] restarting due to changes...
api_1 | [nodemon] starting `node src/index.js`
api_1 | Example API listening on port 3000
```

It does not matter where we develop our application: on Windows, Mac OS X, or your favorite flavor of Linux, our application executes in the same consistent Ubuntu environment, unaffected by differences between host environments.

Bring down our application by pressing Ctrl+C:

```
Gracefully stopping... (press Ctrl+C again to force)
Killing docker_api_1 ... done
$
```

Adding Our Front-end Service

To add our front-end service, we will follow the same flow as above. Begin by editing our docker-compose.yml:

```yaml
version: "3"

services:
  # backend API on port 3000
  api:
    build: .
    ports:
      - "3000:3000"
    volumes:
      - ./api:/app
    working_dir: /app
    command: npm start

  # frontend on port 8080
  frontend:
    build: .
    environment:
      API_URL: http://api:3000
    ports:
      - "8080:8080"
    volumes:
      - ./frontend:/app
    working_dir: /app
    links:
      - api
    command: npm start
```

The definition for our front-end service (frontend:) has a few additional instructions. Inside a container, localhost refers to that container itself, not to the host or to other containers, so communication between containers needs a different address. To help out, Compose makes each service reachable from the other services in the application under a hostname equal to its service name. Our example app has two services, api and frontend, so our front-end service can reach our backend API service at http://api:3000. To tell our frontend service to use this address, we provide an environment variable that the service reads to determine its proxy destination:

```yaml
environment:
  API_URL: http://api:3000
```

We can use this environment instruction to supply any environment variables our service will need. We can also specify service dependencies with:

```yaml
links:
  - api
```

This instructs Compose to start our api service whenever our frontend service is started.
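How the frontend consumes API_URL depends on its dev-server configuration, which this walkthrough does not show. As a hedged sketch, assuming the frontend uses webpack-dev-server's object-style proxy option (as in versions before 5) and that /colors is the only API route it needs, a config could read the variable like this; the devServerConfig function and the localhost fallback are hypothetical:

```javascript
// Hypothetical excerpt of the frontend's webpack config (not taken from the sample repo).
// Assumes webpack-dev-server's object-style `proxy` option (versions before 5).
function devServerConfig(env = process.env) {
  // Under Compose, API_URL is "http://api:3000" (the api service's hostname).
  // Fall back to localhost when the frontend runs directly on the host.
  const apiTarget = env.API_URL || "http://localhost:3000";

  return {
    // Listen on all interfaces; a server bound only to the container's own
    // localhost would not be reachable through the "8080:8080" port mapping.
    host: "0.0.0.0",
    port: 8080,
    proxy: {
      // The browser requests /colors from localhost:8080; the dev server,
      // running inside the frontend container, forwards it to the api service.
      "/colors": { target: apiTarget },
    },
  };
}

module.exports = { devServer: devServerConfig() };
```

The browser never needs to resolve the api hostname itself; only processes on the Compose network can, which is why the request is proxied through the dev server rather than sent to http://api:3000 directly from the page.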

Running Our Distributed Application

We can now build our application, install the dependencies against the container's Ubuntu environment and bring up our application, now composed of both the back-end API and front-end services:

```
$ docker-compose build
Building api
...
Successfully built $HASH
Building frontend
...
Successfully built $HASH

$ docker-compose run frontend npm install
...

$ docker-compose up
Starting docker_api_1
Creating docker_frontend_1
Attaching to docker_api_1, docker_frontend_1
...
api_1 | Example API listening on port 3000
...
frontend_1 | webpack: Compiled successfully.
```

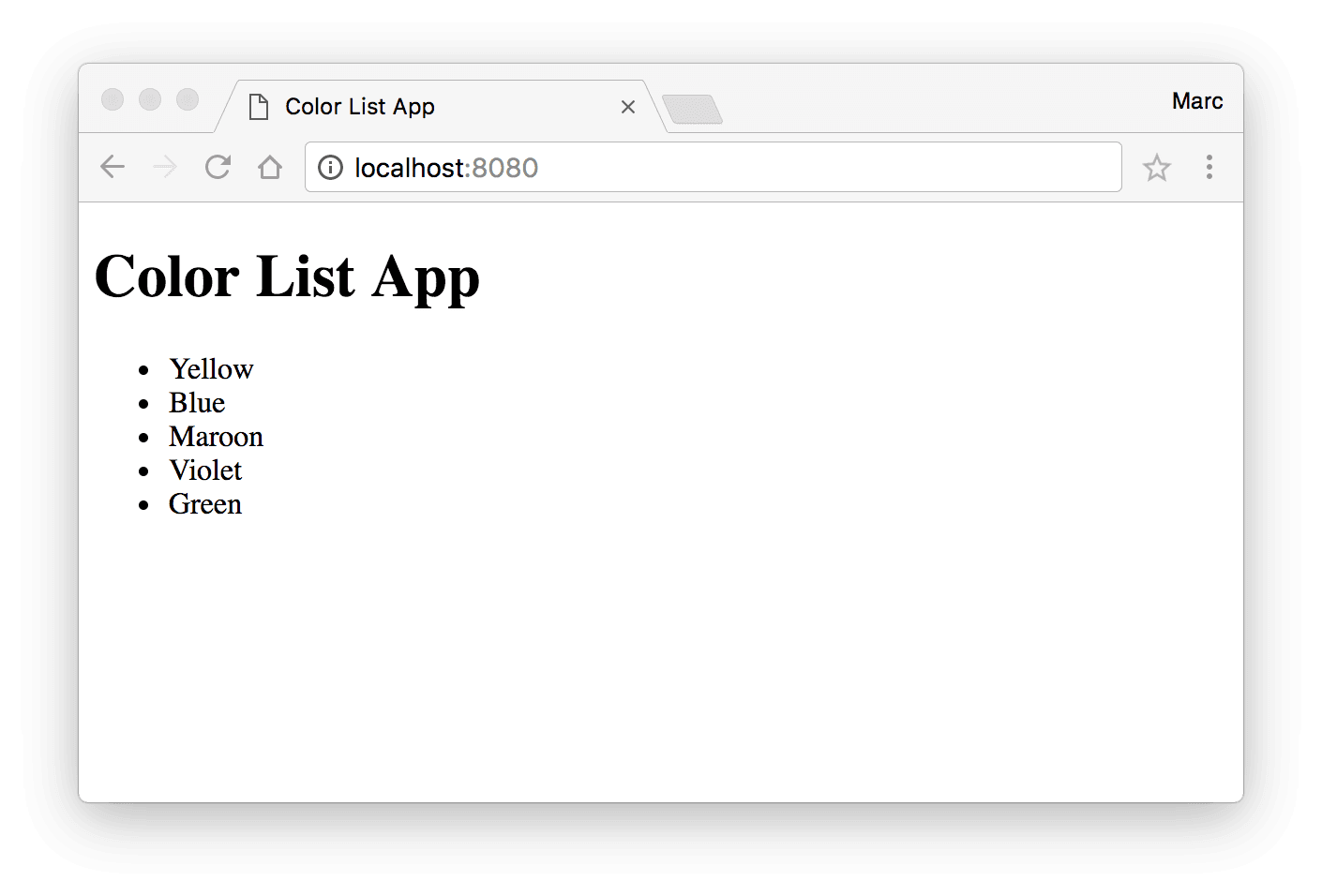

Navigate to http://localhost:8080 to see our distributed application running. webpack-dev-server serves our front-end React application and proxies its request for the list of colors to our back-end API service at http://api:3000; our React application then displays the colors it receives. Because we mounted ./frontend into our container, any changes to our front-end code trigger a webpack rebuild automatically, just as with our back-end service.

You can find a complete, working example here.

Just as with docker, we can use docker-compose up -d to run our application detached from our current shell, and docker-compose exec api bash or docker-compose exec frontend bash to open a bash shell in a running container. We can even stream logs from our running services to our shell with docker-compose logs -f.

Adding Additional Database or Redis Services

To add additional services, define them in our docker-compose.yml; for example, to add PostgreSQL and Redis to our distributed application:

```yaml
version: "3"

services:
  # PostgreSQL
  postgres:
    image: postgres
    environment:
      POSTGRES_USER: "admin"
      POSTGRES_PASSWORD: "password"
      POSTGRES_DB: "db"
    ports:
      - "5432:5432"

  # Redis
  redis:
    image: redis
    ports:
      - "6379:6379"

  # backend API on port 3000
  api:
    build: .
    environment:
      POSTGRES_URI: postgres://admin:password@postgres:5432/db
      REDIS_URI: redis://redis:6379
    links:
      - postgres
      - redis
    ...
```

Like the FROM instruction in a Dockerfile, image: postgres and image: redis instruct Compose to use the latest official PostgreSQL and Redis images from Docker Hub, respectively. When consuming images with Compose, be sure to check each image's page on Docker Hub for additional configuration instructions. For example, the PostgreSQL image lets you set the username, password, and database name through the environment: instruction, as we do above.

Also via the environment: instruction, pass in any connection information to the services that need it. Our back-end API service does not connect to PostgreSQL or Redis yet, but when it needs to, it can simply use process.env.POSTGRES_URI and process.env.REDIS_URI to connect to those services, respectively. Finally, use the links: instruction to ensure that when our back-end API service starts, our PostgreSQL and Redis services start as well.
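As a sketch of what reading those variables might look like, the hypothetical helper below takes POSTGRES_URI and REDIS_URI from the environment, fails fast if either is missing, and splits them into host, port, and credentials using Node's built-in URL class. It assumes nothing about which PostgreSQL or Redis client the API eventually uses; many clients, such as node-postgres with its connectionString option, can also accept the URI directly.

```javascript
// Hypothetical helper (not part of the sample repo): parse the connection
// URIs that Compose injects into the api service via `environment:`.
function readConnectionConfig(env = process.env) {
  for (const name of ["POSTGRES_URI", "REDIS_URI"]) {
    if (!env[name]) {
      throw new Error(`Missing required environment variable ${name}`);
    }
  }

  const pg = new URL(env.POSTGRES_URI); // e.g. postgres://admin:password@postgres:5432/db
  const redis = new URL(env.REDIS_URI); // e.g. redis://redis:6379

  return {
    postgres: {
      host: pg.hostname, // "postgres": the Compose service name
      port: Number(pg.port || 5432),
      user: decodeURIComponent(pg.username),
      password: decodeURIComponent(pg.password),
      database: pg.pathname.slice(1), // strip the leading "/"
    },
    redis: {
      host: redis.hostname, // "redis": the Compose service name
      port: Number(redis.port || 6379),
    },
  };
}

// Example, using the values from our docker-compose.yml:
const config = readConnectionConfig({
  POSTGRES_URI: "postgres://admin:password@postgres:5432/db",
  REDIS_URI: "redis://redis:6379",
});
console.log(config.postgres.host, config.postgres.database, config.redis.port);
// prints: postgres db 6379
```

Inside the api container, calling readConnectionConfig() with no argument reads process.env, so the same code picks up whatever connection details docker-compose.yml supplies.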

After editing our docker-compose.yml, run docker-compose up to pull the images for our newly added services and start our application.

Conclusion

We learned what Docker is, how it differs from virtual machines, and some associated terminology. We created a Dockerfile and learned how to work with it using docker build, run, and exec. We extended our Dockerfile, creating a suitable environment in which our application's services, a Node/Express back-end API and a React front-end, can run. Finally, we used Docker Compose, with docker-compose build and docker-compose up, to orchestrate our application's services for development.

Without Docker, our developer would have had to install and configure each service and dependency separately, being mindful of any incompatibilities introduced by his or her development machine's environment or by the services and dependencies themselves. Now, onboarding our developer is accomplished by:

```
$ git clone ...
$ docker-compose build
$ docker-compose run api npm install
$ docker-compose run frontend npm install
$ docker-compose up
```

With Docker, we can ensure that our developer can run our application with a few simple steps and start contributing to our application's development in minutes.